
ChatGPT Plus vs ChatGPT Pro for Developers: Complete 2025 Comparison with Benchmarks & Code Examples

Definitive comparison of ChatGPT Plus ($20) vs Pro ($200) for developers. Includes o3/o4-mini benchmarks, Codex agent analysis, API cost calculations, and code examples to make the right choice.


The $180/month question that every developer eventually faces: is ChatGPT Pro actually worth ten times the price of ChatGPT Plus? After spending months testing both tiers across real development workflows—debugging production code, analyzing large codebases, and running extensive code generation sessions—the answer isn't a simple yes or no. It depends entirely on how you work and what limits you're hitting.

Here's the reality that most comparison articles miss: ChatGPT Plus at $20/month and ChatGPT Pro at $200/month are fundamentally different products targeting different developer profiles. Plus gives you access to powerful AI models with reasonable limits that work fine for most developers. Pro removes those limits entirely and adds priority access to features like Codex agent and Deep Research that can transform how power users work.

This guide provides what competitors don't: actual benchmark data (o3 scores 2,706 ELO on Codeforces—top 200 programmers globally), working code examples you can run today, and honest cost analysis including when the API might be cheaper than either subscription. Whether you're a freelancer weighing the ROI or a full-time developer frustrated by message caps, you'll find the data you need to make an informed decision.

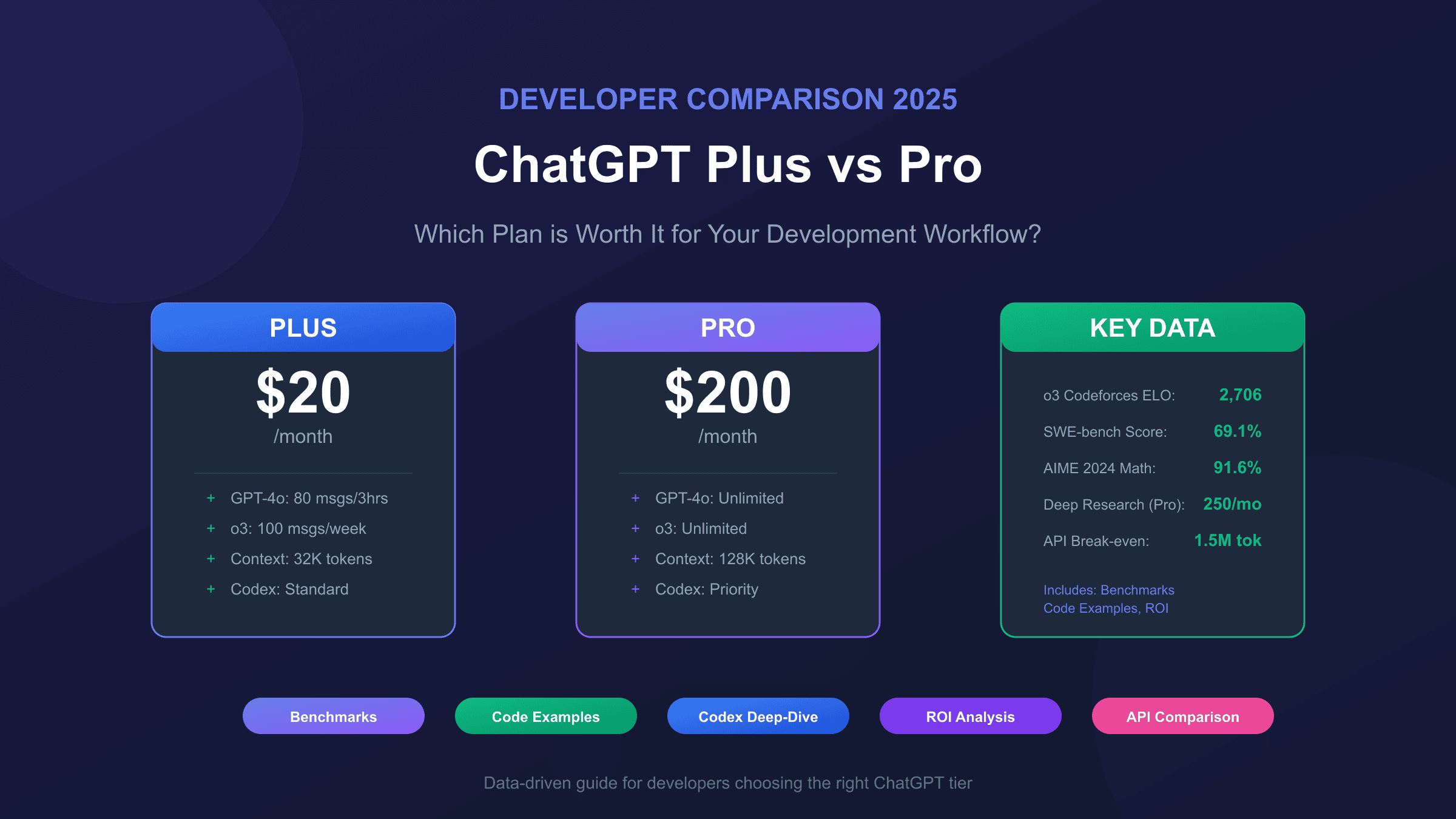

Pricing Breakdown: What $20 vs $200 Actually Gets You

Understanding the true cost difference between ChatGPT Plus and Pro requires looking beyond the headline prices. Both subscriptions are billed monthly with no annual discount option, and critically, neither includes API access—a common misconception that costs developers unnecessary money.

| Plan | Monthly Cost | Annual Cost | API Access | Best Value For |
|---|---|---|---|---|
| ChatGPT Free | $0 | $0 | No | Trying ChatGPT |
| ChatGPT Plus | $20 | $240 | No | Most developers |
| ChatGPT Pro | $200 | $2,400 | No | Power users hitting limits |
| ChatGPT Team | $25-30/user | $300-360/user | No | Small teams |

The 10x price difference between Plus and Pro reflects OpenAI's tiered approach: Plus provides "extended but limited" access to advanced models, while Pro offers "unlimited" access with priority processing. For developers, this translates to concrete differences in daily workflow. A Plus subscriber might hit the 80 messages per 3 hours limit on GPT-4o during an intensive debugging session, forcing a context switch or wait. A Pro subscriber never faces this interruption.

However, the API question deserves emphasis because it trips up so many developers. If you need programmatic access to OpenAI models for building applications, you'll pay separately regardless of your ChatGPT subscription tier. For a detailed walkthrough of how to subscribe to ChatGPT Plus, we've covered the process extensively. The OpenAI API pricing operates on a pay-per-token model completely independent of these subscriptions. A developer running light API usage might spend $5-10/month on tokens while maintaining a $20 Plus subscription for interactive work—a perfectly valid and cost-effective combination.

Feature Comparison: Developer-Specific Capabilities

The feature gap between Plus and Pro matters most in scenarios where developers push AI assistance to its limits. Both tiers share access to core capabilities, but the depth and priority of that access differs significantly in ways that compound over intensive work sessions.

| Feature | ChatGPT Plus | ChatGPT Pro | Developer Impact |
|---|---|---|---|
| GPT-4o/GPT-5 | Extended access | Unlimited | Code generation continuity |
| o3 Reasoning | 100 msgs/week | Unlimited | Complex algorithm design |
| o4-mini | 300 msgs/day | Unlimited | Quick iterations |
| Codex Agent | Standard access | Priority processing | PR generation speed |
| Deep Research | 25 tasks/month | 250 tasks/month | Technical research |
| Context Window | 32K tokens | 128K tokens | Large codebase analysis |
| Advanced Voice | Standard limits | Unlimited | Pair programming |
| Canvas | Full access | Full access | Visual editing |

The context window difference deserves special attention for developers working with substantial codebases. Plus subscribers get 32K tokens (approximately 25,000 words or a medium-sized codebase file), while Pro subscribers get 128K tokens (approximately 100,000 words—enough for an entire microservice). When you need to analyze how a bug propagates across multiple files or understand the architecture of an unfamiliar codebase, this 4x difference becomes the difference between "paste everything at once" and "manually chunk and lose context."

Codex agent access exists in both tiers, but Pro's priority processing means your code generation tasks move to the front of the queue. During peak hours when OpenAI's infrastructure is under heavy load, this can mean the difference between a 2-minute task completion and a 15-minute wait. For developers billing hourly or working under deadlines, that queue priority has concrete dollar value.

Model Access Deep Dive: o3, o4-mini, and When Each Matters

OpenAI's reasoning models—o3 and o4-mini—represent the cutting edge of AI coding assistance, but they come with different access patterns between Plus and Pro that directly impact developer workflows. Understanding these differences requires knowing what each model excels at and how the usage limits translate to real development scenarios.

The o3 model represents OpenAI's most powerful reasoning capability, scoring 2,706 ELO on Codeforces (placing it among the top 200 competitive programmers globally) and achieving 69.1% on SWE-bench Verified for solving real GitHub issues. However, Plus subscribers are limited to 100 messages per week with o3—roughly 14 substantial queries per day if distributed evenly, though most developers use reasoning models in bursts rather than steady streams.

| Model | Plus Limit | Pro Limit | Primary Strength |
|---|---|---|---|
| o3 | 100 msgs/week | Unlimited | Complex reasoning, architecture |
| o4-mini | 300 msgs/day | Unlimited | Fast iteration, code review |
| o4-mini-high | 100 msgs/day | Unlimited | Balanced speed/quality |
| GPT-4o | 80 msgs/3 hours | Unlimited | General coding tasks |

The practical difference emerges during intensive development phases. Consider debugging a complex distributed system issue: you might need 20-30 reasoning-heavy exchanges in a single afternoon, which consumes 20-30% of your weekly o3 allocation on Plus. Pro subscribers can maintain this intensity indefinitely, which matters during crunch periods or when you're deep in flow state on a difficult problem.
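To put numbers on that burst, here is a minimal sketch of the quota arithmetic. The 100-message weekly cap comes from the table above; the function name is illustrative and not part of any OpenAI tooling.

```python
def weekly_quota_share(messages_used: int, weekly_cap: int = 100) -> float:
    """Return the fraction of a weekly message cap consumed by one burst."""
    if weekly_cap <= 0:
        raise ValueError("weekly_cap must be positive")
    return messages_used / weekly_cap


# An afternoon of 20-30 o3 exchanges against the Plus cap of 100 per week.
for burst in (20, 30):
    share = weekly_quota_share(burst)
    print(f"{burst} messages -> {share:.0%} of the weekly allocation")

# Spreading the cap evenly over seven days gives the per-day pace.
print(f"Even pace: {100 / 7:.1f} messages per day")
```

Two afternoons like this in one week would leave less than half of the allocation for the remaining five days.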

o4-mini deserves attention for its remarkable cost-to-performance ratio. Despite being the "mini" model, it scores 2,719 ELO on Codeforces (actually slightly higher than o3) and achieves 68.1% on SWE-bench—nearly matching o3's capability at a fraction of the compute cost. For Plus subscribers managing their reasoning model budget, o4-mini's 300 daily messages often provide sufficient headroom for typical development work while reserving o3 for genuinely complex architectural decisions.

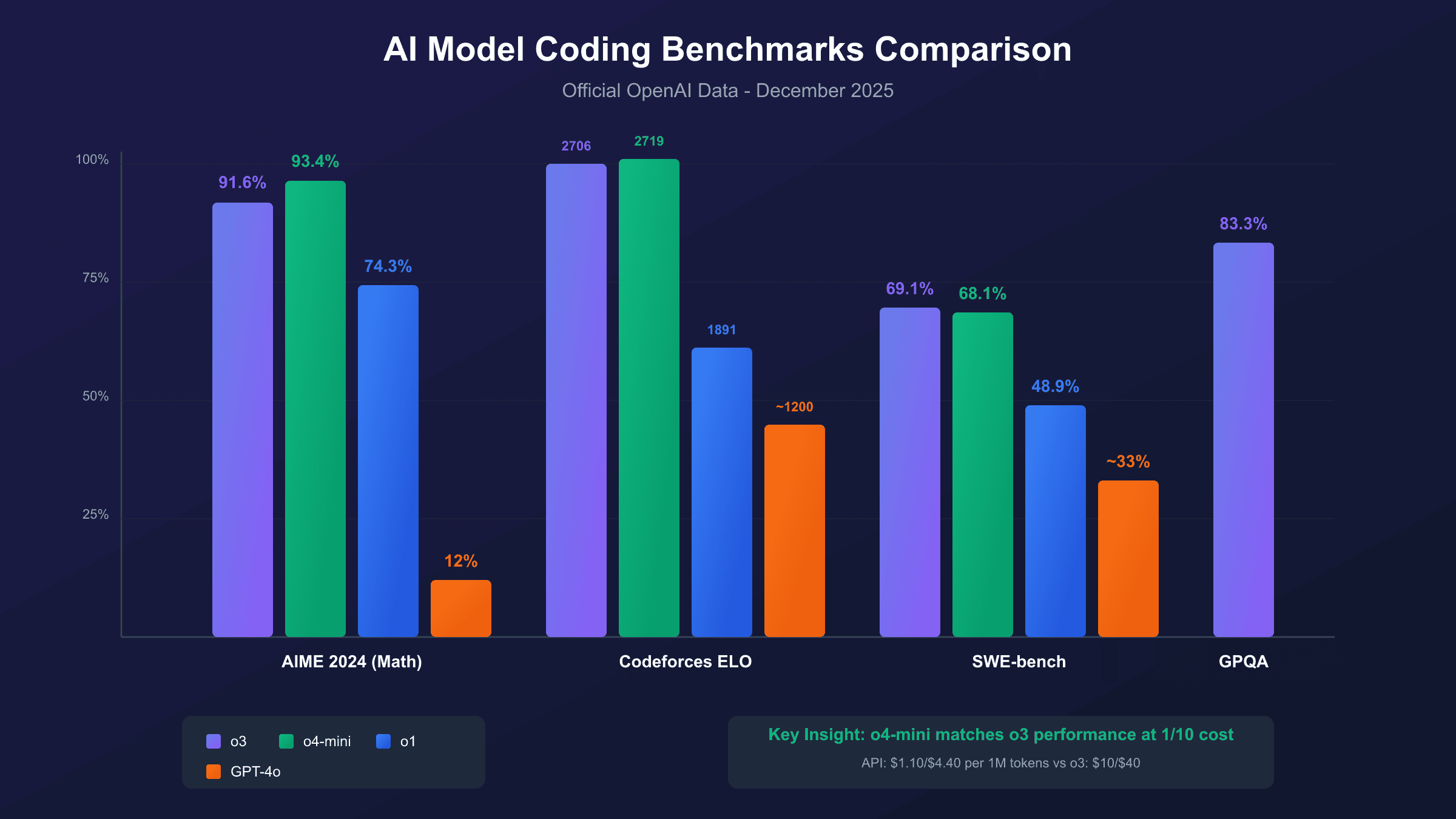

Coding Performance Benchmarks: The Numbers That Actually Matter

Benchmark data provides objective grounding for comparing AI coding assistants, but most articles either omit this data entirely or present it without context. The metrics that matter for developers are competitive programming performance (algorithmic problem-solving), software engineering benchmarks (real-world bug fixing), and mathematical reasoning (which correlates strongly with logical code analysis).

| Benchmark | o3 | o4-mini | o1 | GPT-4o |
|---|---|---|---|---|
| AIME 2024 (Math) | 91.6% | 93.4% | 74.3% | 12% |
| AIME 2025 (Math) | 88.9% | 92.7% | — | — |
| Codeforces ELO | 2,706 | 2,719 | 1,891 | ~1,200 |
| SWE-bench Verified | 69.1% | 68.1% | 48.9% | ~33% |
| GPQA Diamond (Science) | 83.3% | — | — | — |

These numbers tell an important story about model selection. The o3 model's Codeforces ELO of 2,706 exceeds that of OpenAI's Chief Scientist and places it among elite human competitive programmers. For developers facing algorithmic challenges (optimizing a search algorithm, designing an efficient data structure, or solving complex system design problems), o3's reasoning capability provides assistance at the level of a top competitive programmer.

The SWE-bench Verified results translate more directly to everyday development work. This benchmark measures whether models can correctly fix real bugs from actual GitHub issues, including understanding context, identifying root causes, and generating correct patches. o3's 69.1% success rate means it resolved roughly 7 out of 10 issues in the benchmark's curated set. Results on your own codebase will vary with its size and with how well each issue is specified, but that is still a substantial capability for debugging workflows.

```python
# Example: Using reasoning models for complex debugging
# This pattern calls the OpenAI API, which is billed separately from Plus or Pro
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment


def debug_with_reasoning(error_context: str, code_snippet: str) -> str:
    """
    Leverage o3's reasoning for complex debugging scenarios.
    Note: This uses API, not ChatGPT subscription.
    """
    response = client.chat.completions.create(
        model="o3",  # or "o4-mini" for faster iteration
        messages=[
            {
                "role": "system",
                "content": "You are a senior debugging specialist. Analyze the error systematically, considering edge cases and race conditions."
            },
            {
                "role": "user",
                "content": f"Error: {error_context}\n\nCode:\n```\n{code_snippet}\n```\n\nIdentify the root cause and provide a fix."
            }
        ]
    )
    return response.choices[0].message.content

# For reference: o3 resolves 69.1% of SWE-bench Verified issues.
# A single prompt like this one will not necessarily match that rate.
```

The chart below visualizes how these models compare across different benchmark categories, highlighting the surprisingly strong performance of o4-mini relative to its "mini" designation.

Codex Agent: The Developer's Asynchronous Coding Partner

Codex agent represents one of the most significant differentiators between ChatGPT Plus and Pro for serious developers. Launched in May 2025 and powered by codex-1 (an optimized version of o3 specifically trained for software engineering), Codex operates as an autonomous coding agent that can work on tasks in parallel while you focus on other work.

The fundamental shift Codex introduces is asynchronous development. Rather than the traditional back-and-forth of asking ChatGPT to write code, reviewing it, and iterating, Codex accepts a task—"implement user authentication with OAuth 2.0" or "fix the memory leak in the image processing module"—and works on it independently in a sandboxed cloud environment. Task completion times range from 1 to 30 minutes depending on complexity, during which you can continue other work or step away entirely.

Both Plus and Pro subscribers can access Codex, but with meaningfully different experiences. Pro subscribers receive priority processing, which during peak usage hours can mean the difference between a 3-minute wait and a 20-minute queue. For developers integrating Codex into their daily workflow—using it for PR preparation, automated bug fixes, or exploratory prototyping—this priority access compounds into significant time savings.

Codex's capabilities extend beyond simple code generation. According to TechCrunch's coverage of the launch, Codex can write features, fix bugs, answer codebase questions, run tests iteratively until code passes, and propose pull requests for review. The GitHub integration allows preloading your repository into Codex's workspace, giving it full context for understanding your codebase's patterns and conventions.

```bash
# Codex CLI installation and basic usage
npm install -g @openai/codex

# Start a Codex session with an initial prompt (run from inside your repo)
codex "Refactor the authentication middleware to support JWT refresh tokens"

# Run a task non-interactively, for scripts or CI
codex exec "Add unit tests for the payment processing module"

# The CLI works in your local checkout. Parallel tasks in cloud sandboxes,
# each with your repo context, are submitted from the Codex page in ChatGPT.
# Subcommands change between releases, so check `codex --help` for your version.
```

The practical impact for Pro subscribers becomes clear during intensive development phases. Submitting five parallel Codex tasks for different features or fixes, then reviewing completed PRs an hour later, fundamentally changes the development velocity equation. Plus subscribers can achieve similar workflows but with longer queue times and the need to manage their daily task allocations more carefully.

Context Window Impact: Why 32K vs 128K Matters for Real Codebases

The context window—the amount of text a model can "see" and reason about simultaneously—creates one of the most practical differences between Plus and Pro for developers working with non-trivial codebases. Plus provides 32K tokens (approximately 25,000 words), while Pro offers 128K tokens (approximately 100,000 words or 150 pages of content).

To understand the practical impact, consider token counts for typical development artifacts. A single well-documented class file might consume 500-1,500 tokens. A modest microservice's core logic across 10 files could total 15,000-20,000 tokens. The complete context needed to understand a bug that spans configuration, business logic, and data access layers might require 40,000+ tokens—already exceeding the Plus limit.

```python
import tiktoken


def estimate_codebase_tokens(files: list[str]) -> dict:
    """
    Estimate token count for codebase analysis.
    Helps determine if Plus (32K) or Pro (128K) context is needed.
    """
    encoder = tiktoken.encoding_for_model("gpt-4o")
    total_tokens = 0
    file_counts = {}

    for filepath in files:
        # Read as UTF-8 so the count does not depend on the platform default
        with open(filepath, "r", encoding="utf-8") as f:
            content = f.read()
        tokens = len(encoder.encode(content))
        file_counts[filepath] = tokens
        total_tokens += tokens

    return {
        "total_tokens": total_tokens,
        "file_breakdown": file_counts,
        "fits_plus": total_tokens <= 32_000,
        "fits_pro": total_tokens <= 128_000,
        "recommendation": "Plus sufficient" if total_tokens <= 32_000
        else "Pro needed" if total_tokens <= 128_000
        else "Chunking required"
    }

# Example output for a typical FastAPI microservice:
# {"total_tokens": 47000, "fits_plus": False, "fits_pro": True,
#  "recommendation": "Pro needed"}
```

The workaround for Plus subscribers hitting context limits involves manually chunking code and losing cross-file context—exactly the kind of fragmented interaction that reduces AI assistance effectiveness. When debugging a subtle issue, the ability to paste an entire service layer, its tests, and its dependencies in a single context window often determines whether AI assistance produces a correct fix or a plausible-sounding but wrong suggestion.
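The manual chunking described above can be sketched as a greedy grouping of files under a token budget. This is a minimal illustration, assuming per-file token counts are already known (for example from a tokenizer such as tiktoken); the function name and the file names are hypothetical.

```python
def chunk_files_by_budget(
    file_tokens: dict[str, int], budget: int = 32_000
) -> list[list[str]]:
    """Greedily group files into chunks whose token totals fit the budget.

    Files are kept in the order given, so related files should be listed
    next to each other. A single file larger than the budget gets its own
    chunk and still needs to be split by hand.
    """
    chunks: list[list[str]] = []
    current: list[str] = []
    used = 0
    for path, tokens in file_tokens.items():
        # Close the current chunk when the next file would overflow it.
        if current and used + tokens > budget:
            chunks.append(current)
            current, used = [], 0
        current.append(path)
        used += tokens
    if current:
        chunks.append(current)
    return chunks


# Hypothetical token counts for a small service (illustrative numbers only).
counts = {
    "config.py": 4_000,
    "models.py": 12_000,
    "service.py": 15_000,
    "repository.py": 10_000,
    "test_service.py": 6_000,
}
print(chunk_files_by_budget(counts))
# [['config.py', 'models.py', 'service.py'], ['repository.py', 'test_service.py']]
```

Here the 47,000 tokens split into two conversations, and the model never sees `service.py` and `repository.py` together, which is exactly the cross-file context that chunking gives up.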

Pro's 128K window handles most single-service codebases comfortably. The 4x increase over Plus means you can analyze approximately 80-100 source files simultaneously, enough for comprehensive architectural reviews, large refactoring projects, or understanding unfamiliar codebases without the cognitive overhead of managing context manually.

Usage Limits That Actually Affect Developer Workflow

Beyond the headline feature lists, the daily and weekly usage limits embedded in each tier create the real friction points that developers encounter. Understanding these limits in terms of actual development patterns—not abstract message counts—reveals when Plus becomes constraining and when Pro's "unlimited" access provides genuine value.

| Model | Plus Limit | Pro Limit | Typical Developer Usage |
|---|---|---|---|
| GPT-4o | 80 msgs/3 hours | Unlimited | 30-50 msgs/coding session |
| o3 | 100 msgs/week | Unlimited | 5-15 msgs/complex problem |
| o4-mini | 300 msgs/day | Unlimited | 50-100 msgs/review session |
| Deep Research | 25 tasks/month | 250 tasks/month | 2-5 tasks/research topic |

The GPT-4o limit of 80 messages per 3 hours sounds generous until you're deep in a debugging session. Each code refinement, each "try this approach instead," each "now add error handling"—these conversational turns consume your allocation quickly. A focused 2-hour pair programming session with AI assistance might involve 40-60 exchanges, leaving slim margin before hitting the limit.
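For a rough sense of remaining headroom during a session, a client-side counter can help. The sketch below assumes the cap behaves as a rolling 3-hour window, which is an assumption about how the limit is enforced rather than documented behavior; the class name is hypothetical.

```python
from collections import deque


class RollingMessageBudget:
    """Track messages sent inside a rolling time window (client-side estimate)."""

    def __init__(self, limit: int = 80, window_seconds: int = 3 * 60 * 60):
        self.limit = limit
        self.window_seconds = window_seconds
        self._sent: deque[float] = deque()

    def _drop_expired(self, now: float) -> None:
        # Forget messages that have aged out of the window.
        while self._sent and now - self._sent[0] >= self.window_seconds:
            self._sent.popleft()

    def record(self, now: float) -> None:
        """Record one message sent at time `now` (in seconds)."""
        self._drop_expired(now)
        self._sent.append(now)

    def remaining(self, now: float) -> int:
        """Messages left before the assumed cap is reached."""
        self._drop_expired(now)
        return max(0, self.limit - len(self._sent))


# A two-hour session with one message every two minutes (60 messages).
budget = RollingMessageBudget()
for minute in range(0, 120, 2):
    budget.record(now=minute * 60)
print(budget.remaining(now=120 * 60))  # 20
```

In real use, `now` would come from `time.time()`; explicit timestamps are used here so the example is deterministic.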

The weekly o3 allocation creates more strategic planning requirements for Plus subscribers. With 100 messages for the entire week, you learn to reserve o3 for genuinely complex reasoning tasks—architectural decisions, algorithm optimization, system design—while using o4-mini or GPT-4o for routine coding assistance. This mental overhead of "is this worth an o3 message?" adds friction that Pro subscribers never experience.
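That "is this worth an o3 message?" decision can be written down as a small routing rule. This is only a sketch of one possible policy: the task categories are hypothetical labels, and the model names are the ones discussed in this article.

```python
def pick_model(task_kind: str, o3_left_this_week: int) -> str:
    """Choose a model name for a task, spending o3 only on reasoning-heavy work."""
    reasoning_heavy = {"architecture", "algorithm", "system-design"}
    if task_kind in reasoning_heavy and o3_left_this_week > 0:
        return "o3"
    # Fall back to o4-mini when the o3 budget is gone, and use it for reviews.
    if task_kind in reasoning_heavy or task_kind == "code-review":
        return "o4-mini"
    return "gpt-4o"


print(pick_model("architecture", o3_left_this_week=40))  # o3
print(pick_model("architecture", o3_left_this_week=0))   # o4-mini
print(pick_model("rename-variable", o3_left_this_week=40))  # gpt-4o
```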

Deep Research limits impact developers differently depending on work patterns. The 25 monthly tasks on Plus work fine for occasional technical research—evaluating a new framework, understanding a complex specification, preparing for a technical interview. But developers doing continuous research-heavy work (technical writing, competitive analysis, architecture decision records) burn through 25 tasks quickly and find Pro's 250-task allocation essential.

API vs ChatGPT Subscription: Developer Economics

One of the most important decisions developers face isn't Plus vs Pro—it's whether the ChatGPT subscription model fits their workflow at all, compared to direct API access. The economics vary dramatically based on usage patterns, and understanding the break-even points prevents overpaying for the wrong access model.

The fundamental difference: ChatGPT subscriptions provide an interface with usage limits, while API access provides programmatic endpoints with pay-per-token pricing. For the same underlying models, these represent completely different cost structures.

| Usage Profile | API Monthly Cost | Plus ($20) | Pro ($200) | Best Choice |
|---|---|---|---|---|
| Light (100K tokens/mo) | $0.50-2 | Overpaying | Way overpaying | API |
| Moderate (1M tokens/mo) | $5-15 | Good value | Overpaying | Plus |
| Heavy (5M tokens/mo) | $25-75 | Hitting limits | Good value | Pro or API |
| Extreme (20M+ tokens/mo) | $100-400 | Impossible | Unlimited | Pro wins |

The calculation depends on your specific model usage. GPT-4o API pricing runs approximately $2.50-5 per million input tokens and $10-15 per million output tokens. o4-mini offers remarkably cost-effective pricing at $1.10 per million input and $4.40 per million output—making it the optimal choice for high-volume programmatic usage. At these rates, a developer using 2 million tokens monthly (substantial usage) would spend roughly $10-25 on API—less than a Plus subscription.
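The break-even point against a subscription follows directly from those per-token rates. The sketch below uses the lower-bound GPT-4o rates and the o4-mini rates quoted above, and assumes an even split between input and output tokens, which is an illustrative assumption; real mixes vary by workload.

```python
def blended_rate(input_rate: float, output_rate: float, input_share: float = 0.5) -> float:
    """USD per million tokens for a given input/output mix."""
    return input_rate * input_share + output_rate * (1 - input_share)


def break_even_tokens(subscription_usd: float, rate_per_million: float) -> float:
    """Monthly token volume at which API spend equals the subscription price."""
    return subscription_usd / rate_per_million * 1_000_000


gpt4o = blended_rate(2.50, 10.00)   # 6.25 USD per million tokens
o4_mini = blended_rate(1.10, 4.40)  # about 2.75 USD per million tokens

print(f"2M tokens on GPT-4o: ${2 * gpt4o:.2f}")
print(f"Plus break-even on GPT-4o: {break_even_tokens(20, gpt4o) / 1e6:.1f}M tokens")
print(f"Plus break-even on o4-mini: {break_even_tokens(20, o4_mini) / 1e6:.1f}M tokens")
```

Under these assumptions, 2 million tokens on GPT-4o cost $12.50, and API spend only reaches the $20 Plus price at roughly 3.2 million tokens per month on GPT-4o or 7.3 million on o4-mini.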

However, API usage requires building or maintaining tooling around the raw endpoints. The ChatGPT interface provides conversation history, file uploads, code execution, and the Codex agent without additional development work. For interactive assistance during development, the subscription model's convenience often justifies its premium over raw API access.

For developers needing API access alongside interactive chat, platforms like laozhang.ai offer OpenAI-compatible endpoints at competitive rates, with particular advantages for developers in regions where direct OpenAI access is restricted. The API aggregator model provides 200+ models through a single endpoint with pricing similar to official rates—useful when you need programmatic access without maintaining separate provider relationships.

```python
# Cost tracking example for API usage
import tiktoken


def track_api_cost(messages: list, model: str = "gpt-4o") -> dict:
    """
    Estimate the API cost of a conversation.
    Helps compare against subscription value.
    """
    try:
        encoder = tiktoken.encoding_for_model(model)
    except KeyError:
        # Older tiktoken releases may not recognize newer model names
        encoder = tiktoken.get_encoding("o200k_base")

    # Token counts (message content only; per-message overhead is ignored)
    input_tokens = sum(len(encoder.encode(m["content"])) for m in messages)

    # Pricing per million tokens (approximate, check current rates)
    pricing = {
        "gpt-4o": {"input": 2.50, "output": 10.00},
        "o3": {"input": 10.00, "output": 40.00},
        "o4-mini": {"input": 1.10, "output": 4.40},
    }
    rates = pricing.get(model, pricing["gpt-4o"])

    estimated_output = int(input_tokens * 1.5)  # Assumed output/input ratio
    cost = (input_tokens * rates["input"] +
            estimated_output * rates["output"]) / 1_000_000

    return {
        "input_tokens": input_tokens,
        "estimated_output": estimated_output,
        "cost_usd": round(cost, 4),
        "monthly_projection": round(cost * 30, 2)  # If this were daily usage
    }

# Example: a substantial debugging session on gpt-4o with 15,000 input tokens
# gives 22,500 estimated output tokens and a cost of about $0.26,
# or roughly $7.90 per month if repeated daily.
# Still below the $20 Plus subscription for light users
```

Deep Research: Technical Analysis at Scale

Deep Research emerged as one of ChatGPT Pro's standout features for developers who need to synthesize information across large numbers of sources. Powered by o3's reasoning capabilities, Deep Research operates like a research analyst that can sift through hundreds of sources, cross-reference findings, and produce structured reports—tasks that traditionally consume hours of manual effort.

The feature's value for developers manifests in several scenarios. Technical due diligence on a new framework—"analyze the security implications and performance characteristics of adopting GraphQL Federation in our microservices architecture"—becomes a 10-minute Deep Research task rather than a half-day reading expedition. Competitive analysis, API documentation synthesis, and technology landscape mapping all benefit from Deep Research's ability to gather, filter, and synthesize at scale.

Plus subscribers receive 25 Deep Research tasks monthly, which works for occasional research needs but constrains developers doing continuous research-heavy work. Pro's 250-task allocation (10x higher) removes this constraint entirely, enabling developers to use Deep Research liberally—for every technology decision, every architecture review, every "should we adopt this library" question.

The quality difference between asking ChatGPT a question directly versus running a Deep Research task is substantial. Standard chat draws on the model's training data. Deep Research actively browses the web, reads documentation, compares sources, and can even ask clarifying questions before delivering a report. For rapidly evolving technology topics where training data staleness matters, this real-time research capability often produces more accurate and current information.

ROI Analysis: When Pro Actually Pays for Itself

The $200/month Pro price tag requires justification in concrete business terms. For developers who bill hourly or whose time has quantifiable value, the calculation becomes straightforward: does Pro save enough time to offset its cost compared to Plus or free alternatives?

| Scenario | Hours Saved/Month | Value of Time Saved | Pro ROI | Verdict |
|---|---|---|---|---|
| Freelancer ($100/hr) | 2+ hours | $200+ | Positive | Pro justified |
| Employee (~$72/hr, $150K salary) | 3+ hours | $216+ | Positive | Advocate for Pro |
| Hobbyist | N/A | $0 | Negative | Stick with Plus |
| Team lead | 4+ hours | $400+ | Very positive | Team subscription |

The time savings compound in several ways. Eliminated queue waits during peak hours (Pro priority processing) save 5-15 minutes per intensive session. Avoided context-switching when hitting Plus limits preserves flow state worth 20-30 minutes of recovery time per interruption. Codex priority processing means submitted tasks complete faster, reducing the cognitive overhead of tracking pending work.

For developers billing $75-150/hour, common rates for senior contractors and consultants, Pro's break-even point arrives at roughly 1.3-2.7 hours of saved time monthly ($200 divided by the hourly rate). Given that a single avoided limit interruption during client work can cost 30 minutes of billable time, Pro subscribers working with clients often recoup the subscription cost within the first week of each month.
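The break-even figure is the subscription price divided by the value of an hour. A minimal sketch, with a hypothetical function name:

```python
def break_even_hours(hourly_rate: float, monthly_cost: float = 200.0) -> float:
    """Hours of saved time per month needed to cover the subscription."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return monthly_cost / hourly_rate


for rate in (75, 100, 150):
    print(f"${rate}/hour -> {break_even_hours(rate):.1f} hours/month")
```

This prints 2.7, 2.0, and 1.3 hours respectively. Passing `monthly_cost=180.0` instead gives the break-even for the incremental cost of upgrading from Plus.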

The calculation shifts for employed developers who don't directly bill hours. Here, the justification becomes productivity enhancement worth advocating for to employers. If Pro's unlimited access enables an extra feature shipped per sprint or reduces debugging time by 10%, the $200/month cost becomes negligible against typical developer salaries—essentially a high-ROI tooling expense comparable to IDE licenses or cloud development environments.

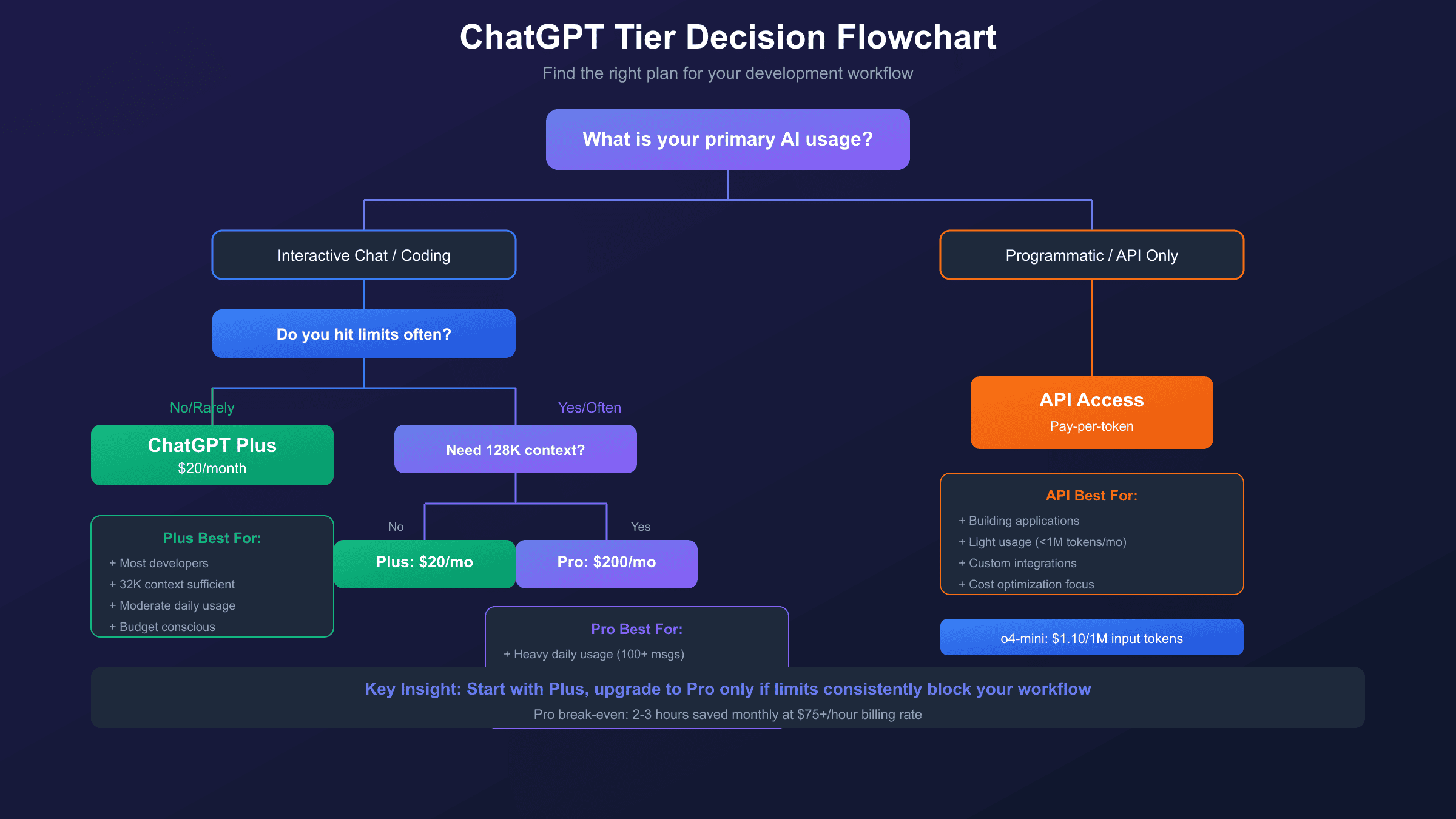

Who Should Choose Which: A Decision Framework

After examining features, benchmarks, costs, and workflows, the recommendation crystallizes into clear personas. The right choice depends less on budget alone and more on work patterns, usage intensity, and how AI assistance integrates into your development process.

ChatGPT Plus ($20/month)

Working patterns that fit Plus well include moderate daily usage with natural breaks, projects where 32K context window suffices (single-file or small multi-file work), occasional rather than constant o3 reasoning model needs, and Codex usage for individual tasks rather than parallel workflows. The typical Plus developer might interact with ChatGPT 20-40 times daily, primarily using GPT-4o and o4-mini with occasional o3 queries for complex problems.

Plus also works well for developers exploring AI assistance before committing to higher tiers. The $20 price point allows genuine evaluation of how AI tools fit into your workflow without significant financial risk. Many developers discover that Plus limits rarely constrain them, while others quickly identify that they need Pro's headroom.

ChatGPT Pro ($200/month)

Pro becomes worthwhile when you regularly hit Plus limits during work sessions, when large codebase analysis (40K+ tokens) is routine rather than exceptional, when Deep Research would replace substantial manual research time, when Codex parallel task execution would transform your workflow, or when client-facing work means any interruption has concrete dollar cost.

The typical Pro developer works intensively with AI assistance throughout the day, potentially running 100+ model interactions across various tasks. They've likely already used Plus and found specific limits frustrating—the o3 weekly cap during a complex architecture week, the context window forcing manual chunking of a debugging session, or the Deep Research allocation exhausted mid-project.

Neither subscription (API-only)

Pure API access makes sense for programmatic use cases with minimal interactive chat needs, for cost-optimization with light usage (under 1M tokens/month), for building applications that integrate AI rather than using AI as a tool, or for developers who prefer custom interfaces over ChatGPT's web UI. The API model offers maximum flexibility at potentially lower cost, but requires more setup and lacks the convenience features of the ChatGPT interface.

Cost-Effective Alternatives for Developers

Beyond the Plus vs Pro binary, developers have several alternatives worth considering—either as primary AI assistance or as supplements to a ChatGPT subscription. The ecosystem of AI coding tools has matured significantly, offering specialized capabilities that sometimes exceed ChatGPT's generalist approach.

Direct API access remains the most flexible option for developers comfortable with programmatic integration. The o4-mini model's pricing ($1.10/$4.40 per million input/output tokens) delivers near-o3 performance at roughly 10% of the cost. Building a simple CLI tool around the API can replicate much of ChatGPT's conversational functionality while providing complete control over model selection, context management, and cost tracking.
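As a rough idea of what such a CLI tool involves, here is a minimal sketch. The `ChatSession` class and `openai_complete` helper are hypothetical names for illustration; the only OpenAI call used is `client.chat.completions.create`, the same one shown earlier in this article. The completion function is passed in as a callable so the history handling can be tried without network access.

```python
from typing import Callable

Message = dict[str, str]


class ChatSession:
    """Keep conversation history and delegate completion to a callable."""

    def __init__(self, complete: Callable[[list[Message]], str], system: str = ""):
        self._complete = complete
        self.messages: list[Message] = []
        if system:
            self.messages.append({"role": "system", "content": system})

    def ask(self, prompt: str) -> str:
        """Send one user turn with the full history and store the reply."""
        self.messages.append({"role": "user", "content": prompt})
        reply = self._complete(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def openai_complete(model: str = "o4-mini") -> Callable[[list[Message]], str]:
    """Build a completion callable backed by the Chat Completions API."""
    from openai import OpenAI  # imported lazily so ChatSession works without it

    client = OpenAI()  # reads OPENAI_API_KEY from the environment

    def complete(messages: list[Message]) -> str:
        response = client.chat.completions.create(model=model, messages=messages)
        return response.choices[0].message.content or ""

    return complete


def main() -> None:
    """Interactive loop; type 'exit' or an empty line to stop."""
    session = ChatSession(openai_complete(), system="You are a concise coding assistant.")
    while (prompt := input("you> ").strip()) not in {"", "exit", "quit"}:
        print(session.ask(prompt))
```

Calling `main()` starts the loop. Because the whole history is resent on every turn, long sessions grow in cost, which is where the context management and cost tracking mentioned above come in.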

Anthropic's Claude Pro ($20/month) provides a direct alternative with different strengths. Claude excels at nuanced code review, long-form technical writing, and careful reasoning about edge cases. The 200K context window exceeds even ChatGPT Pro's 128K, making Claude particularly suited for large codebase analysis. Many developers maintain both subscriptions, using each for its strengths.

GitHub Copilot ($10-19/month depending on tier) focuses specifically on in-editor code completion rather than conversational assistance. The integration with VS Code and JetBrains IDEs provides real-time suggestions that ChatGPT's interface can't match. Copilot and ChatGPT serve complementary purposes—Copilot for line-by-line assistance, ChatGPT for architectural discussion and debugging analysis.

For developers needing API access with additional benefits, aggregator platforms like laozhang.ai offer unified access to 200+ models through a single endpoint. The practical advantages include simplified billing across providers, access from regions where direct provider connections are restricted (particularly valuable for developers in Asia), and competitive pricing that often matches or beats official rates. This approach works well when you need programmatic flexibility without managing multiple provider relationships.

| Alternative | Monthly Cost | Strength | Best Combined With |
|---|---|---|---|
| API Direct (o4-mini) | $5-50 | Maximum flexibility | Plus for chat |
| Claude Pro | $20 | Long context, nuance | Plus for Codex |
| GitHub Copilot | $10-19 | In-editor completion | Any ChatGPT tier |
| API Aggregator | $5-100+ | Multi-model access | Plus or standalone |

Frequently Asked Questions

Does ChatGPT Plus or Pro include API access?

No—this is the most common misconception about ChatGPT subscriptions. Both Plus and Pro provide access only through the ChatGPT web interface, mobile apps, and desktop applications. API access is billed separately through OpenAI's platform pricing, calculated per token used. A developer might reasonably maintain both a $20 Plus subscription for interactive assistance and a separate API account for programmatic integration, with the API costs varying based on actual usage. The subscription and API are fundamentally different products sharing the same underlying models.

Can I switch between Plus and Pro month-to-month?

Yes, OpenAI allows upgrading or downgrading between subscription tiers at any time. Your subscription history, conversation logs, and custom GPTs remain intact across tier changes. This flexibility enables a practical evaluation strategy: start with Plus, identify whether you're hitting limits that matter for your workflow, and upgrade to Pro only when the constraints become genuinely costly. Downgrading works similarly if Pro's additional capacity proves unnecessary.

How does Codex agent differ between Plus and Pro?

Both tiers access the same Codex agent capabilities—writing features, fixing bugs, answering codebase questions, and proposing PRs. The difference lies in processing priority. Pro subscribers' Codex tasks move to the front of the processing queue, resulting in faster completion times especially during peak usage hours. For developers submitting multiple parallel Codex tasks as part of their workflow, this priority access can reduce total wait time significantly. Plus subscribers experience the same functionality with longer queue times during busy periods.

Is the 128K Pro context window worth the price difference alone?

For developers routinely working with large codebases, the context window difference alone can justify Pro's cost. The Plus limit of 32K tokens forces manual chunking for any analysis spanning more than roughly 20-25 typical source files. This chunking loses cross-file context that's often crucial for understanding bugs, planning refactors, or reviewing architecture. If your regular work involves files totaling more than 32K tokens, and context fragmentation costs you debugging time or accuracy, Pro's 128K window provides concrete time savings. For smaller projects or file-by-file work, Plus's context typically suffices.

Should my company pay for Pro subscriptions for the development team?

The business case is straightforward: if Pro saves each developer about 3 hours monthly, it costs less than the salary time it recovers. For a developer earning $150K annually (roughly $72/hour over 2,080 working hours, before benefits and overhead), Pro's $200/month break-even point arrives at under 3 hours of saved time. Given that Pro eliminates limit interruptions, speeds Codex processing, and enables large-codebase analysis, intensive users can plausibly recover that time within the first week. Teams should also consider ChatGPT Team ($25-30/user/month), which provides enhanced collaboration features at a lower per-seat cost than individual Pro subscriptions.

Conclusion: Making the Right Choice for Your Development Workflow

The ChatGPT Plus vs Pro decision ultimately reduces to an honest assessment of your usage patterns. Plus at $20/month delivers remarkable AI coding assistance that satisfies most developers' needs: access to GPT-4o, o3, o4-mini, Codex agent, and the full feature set with reasonable limits. The 32K context window handles typical file-by-file work. The weekly o3 allocation covers normal reasoning model needs. For the majority of developers, Plus provides genuine value without the 10x Pro price tag.

Pro earns its $200/month when you're among the power users who genuinely need unlimited access. If you regularly exhaust Plus limits during intensive coding sessions, if large codebase analysis is routine rather than exceptional, if Deep Research would replace hours of manual research, if Codex priority processing has concrete value for your deadlines—then Pro's cost becomes a productivity investment rather than an expense. The developers who benefit most from Pro already know they need it, having run into Plus limits often enough that the constraints have quantifiable impact.

For those uncertain, the path is clear: start with Plus, work with it genuinely for a month, and track when you hit limits. If those limit encounters cost you flow state, deadline stress, or workaround time, upgrade. If Plus limits rarely constrain your actual work patterns, you've found your tier. And remember that API access exists independently—for programmatic needs, direct API integration often proves more cost-effective than either subscription tier, especially with efficient models like o4-mini offering near-premium performance at budget pricing.