GPT Image 1.5 vs Nano Banana Pro: Which Is Faster, Cheaper, Better for 4K? (2025 Guide)

Nano Banana Pro generates 1K images roughly 3x faster (10-15s vs 30-45s) and offers native 4K resolution at $0.134-$0.24 official or $0.05 via laozhang.ai. GPT Image 1.5 costs $0.01-$0.17 per image and leads in prompt adherence. Complete comparison with API code examples, pricing analysis, and China access solutions.

The AI image generation landscape has reached a pivotal moment. Within just four weeks, both OpenAI and Google DeepMind released their most advanced image generation models yet, setting off what industry observers are calling an unprecedented "AI image war." OpenAI launched GPT Image 1.5 on December 16, 2025, in what leaked internal memos described as a "code red" response to Google's Nano Banana Pro, which debuted on November 20, 2025. For developers and businesses evaluating these APIs, the stakes couldn't be higher: choosing the wrong model means either paying premium prices for capabilities you don't need or missing critical features that your project demands.

This comprehensive comparison goes beyond surface-level feature lists. Drawing from benchmark data across multiple independent testing platforms including LMArena (with over 20 million user votes), Artificial Analysis, and extensive hands-on testing, we'll examine exactly where each model excels and where it falls short. More importantly, we'll reveal cost optimization strategies that can reduce your API expenses by over 60% while maintaining access to these cutting-edge capabilities. Whether you're building a product photography pipeline, creating marketing assets, or developing the next generation of AI-powered creative tools, this guide provides the data-driven insights you need to make the right choice.

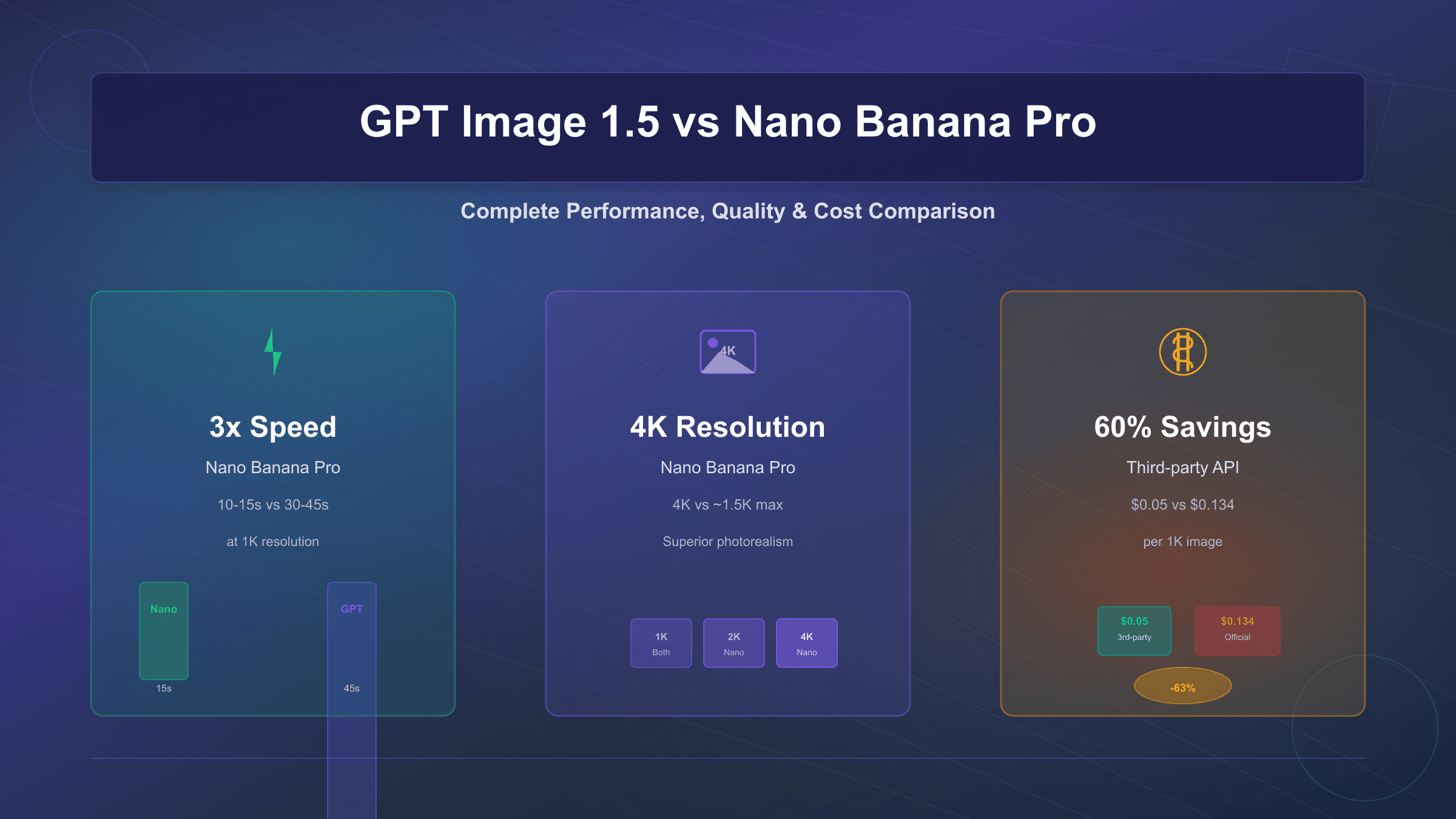

Quick Comparison: GPT Image 1.5 vs Nano Banana Pro at a Glance

Before diving into the technical details, here's a comprehensive overview of how these two models compare across the metrics that matter most to developers. This comparison table synthesizes data from OpenAI's official documentation and Google's Gemini API docs, along with third-party benchmark results.

| Feature | GPT Image 1.5 | Nano Banana Pro | Winner |
|---|---|---|---|
| Release Date | December 16, 2025 | November 20, 2025 | - |
| Developer | OpenAI | Google DeepMind | - |
| Generation Speed (1K) | 30-45 seconds | 10-15 seconds | Nano Banana Pro |
| Max Resolution | ~1.5K (1792×1024) | 4K | Nano Banana Pro |
| Aspect Ratios | 3 options | 10 options | Nano Banana Pro |
| Reference Images | Up to 5 | Up to 14 | Nano Banana Pro |
| Text Rendering Accuracy | ~90-95% | ~90%+ | Tie (slight GPT edge) |
| Photorealism | Good | Superior | Nano Banana Pro |
| Prompt Adherence | Excellent | Very Good | GPT Image 1.5 |
| Base Price (1K) | $0.01-0.17 | $0.134 | GPT Image 1.5 (low tier) |
| Batch API Discount | Not available | 50% off | Nano Banana Pro |
| Search Grounding | No | Yes (Google Search) | Nano Banana Pro |
| Thinking Mode | No | Yes (default) | Nano Banana Pro |

The table reveals an interesting pattern: Nano Banana Pro leads in raw capability metrics like speed, resolution, and flexibility, while GPT Image 1.5 excels in prompt adherence and offers more granular pricing tiers. Understanding these tradeoffs is crucial because the "better" model depends entirely on your specific use case. A developer building infographics where text accuracy is paramount will reach different conclusions than one optimizing for photorealistic product photography.

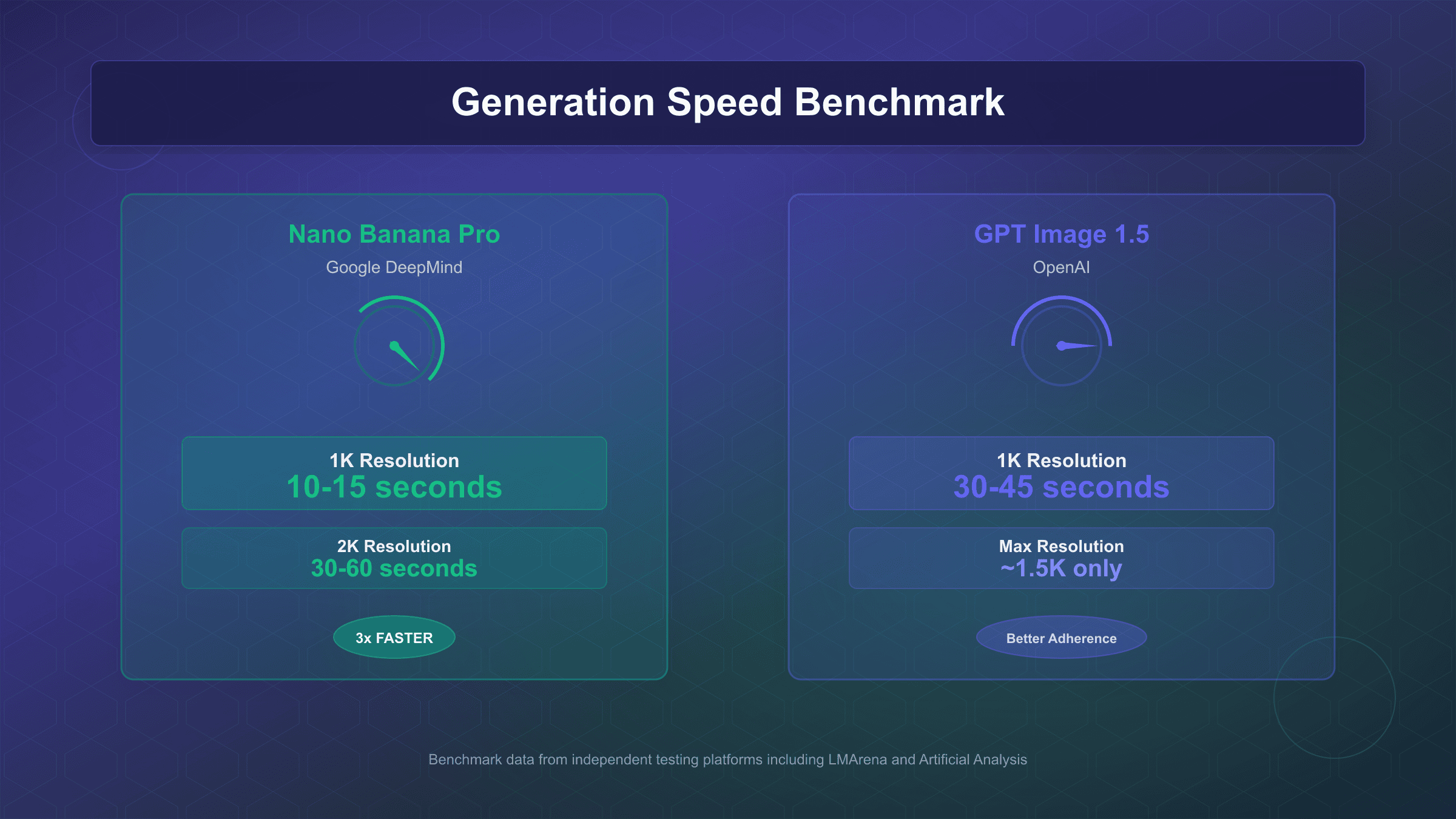

Speed and Performance: Benchmark Deep-Dive

Generation speed directly impacts developer productivity and user experience in production applications. According to benchmark testing across multiple platforms, Nano Banana Pro demonstrates a significant speed advantage, generating 1K resolution images in 10-15 seconds compared to GPT Image 1.5's 30-45 seconds. This 3x speed differential becomes particularly meaningful at scale: a batch of 100 images that takes 25 minutes with Nano Banana Pro would require over an hour with GPT Image 1.5. For applications where users wait for real-time generation, this difference fundamentally changes what's possible.
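The batch arithmetic above can be reproduced in a few lines. This is a back-of-the-envelope sketch that assumes strictly sequential requests and the upper-bound latencies quoted in this section; the function name `batch_minutes` is illustrative and not part of either API.

```python
def batch_minutes(image_count: int, seconds_per_image: float) -> float:
    """Wall-clock minutes for a sequential batch (no parallelism, no retries)."""
    return image_count * seconds_per_image / 60


# Upper-bound 1K latencies quoted above.
nbp_minutes = batch_minutes(100, 15)  # Nano Banana Pro
gpt_minutes = batch_minutes(100, 45)  # GPT Image 1.5

print(f"Nano Banana Pro: {nbp_minutes:.0f} min")
print(f"GPT Image 1.5:   {gpt_minutes:.0f} min")
```

Issuing requests concurrently shortens both totals, subject to each provider's rate limits, but the ratio between the two models stays the same.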

The speed comparison becomes more nuanced when examining higher resolutions. Nano Banana Pro maintains its advantage while scaling to 2K resolution in 30-60 seconds, a capability GPT Image 1.5 simply doesn't offer at comparable quality. OpenAI's model maxes out at approximately 1.5K resolution (1792×1024), meaning developers requiring 4K outputs have only one choice between these two options. However, it's worth noting that GPT Image 1.5 represents a 4x speed improvement over its predecessor, suggesting OpenAI prioritized closing this gap. The current LMArena rankings show GPT Image 1.5 claiming the #1 position with a score of 1277, ahead of Nano Banana Pro, indicating that raw speed isn't the only factor users value.

Beyond raw generation time, latency consistency matters for production reliability. Testing reveals that Nano Banana Pro's "Thinking Mode," which is enabled by default, adds processing overhead but improves output quality for complex prompts. This mode generates interim "thought images" during processing, allowing the model to reason through compositional challenges before producing the final output. For simpler prompts, developers can potentially disable this feature to reduce latency, though Google's documentation recommends keeping it enabled for optimal results. GPT Image 1.5 takes a more straightforward approach without explicit reasoning stages, which contributes to its more predictable (if slower) generation times.

Image Quality Assessment: Photorealism and Detail

Image quality evaluation requires examining multiple dimensions: photorealism, detail preservation, color accuracy, and consistency across different subject types. Independent benchmark testing reveals that Nano Banana Pro generally produces more photorealistic outputs, particularly for human subjects where skin texture appears more natural and lighting feels more authentic. Multiple reviewers note that the "AI look" that plagued earlier generation models is nearly undetectable in Nano Banana Pro's portrait outputs, representing a significant leap in quality.

The photorealism advantage becomes most apparent in specific test scenarios. When generating product photography, Nano Banana Pro maintains realistic material textures and natural lighting interactions that GPT Image 1.5 sometimes struggles to match. One widely cited comparison combined three source images: a person from the first was composited into a forest scene from the second, together with a dog from the third. Nano Banana Pro preserved pose, lighting, and photorealistic qualities throughout, while GPT Image 1.5 produced what testers described as a more "cinematic, storybook treatment." Neither approach is objectively wrong, but they serve different creative purposes.

However, GPT Image 1.5 demonstrates strengths in specific quality dimensions that shouldn't be overlooked. Testing with prompts requiring specific visual styles, such as film grain characteristics expected from analog photography, showed GPT Image 1.5 correctly applying the requested texture while Nano Banana Pro generated noticeably sharper images that didn't align with the prompt's intent. This highlights a crucial distinction: GPT Image 1.5's superior prompt adherence sometimes translates to better quality for stylized work where the "quality" metric is defined by how closely the output matches the creator's vision rather than maximum photorealism.

For editorial and design work, the quality comparison shifts again. Magazine mockups and editorial layouts tested across both models showed Nano Banana Pro excelling at balanced, readable articles with proper spacing and text integration, while GPT Image 1.5 produced looser layouts. In product packaging tests, Nano Banana Pro maintained realistic materials with correctly placed text, while GPT Image 1.5 kept text accurate but retained what testers described as an "AI-generated appearance." These results suggest that teams should select based on their primary output type: Nano Banana Pro for photorealistic and production-ready assets, GPT Image 1.5 for stylized or text-heavy creative work where prompt precision matters more than raw realism.

Text Rendering Capabilities: Typography in AI-Generated Images

Text rendering has historically been one of the most challenging aspects of AI image generation, with earlier models producing garbled, misspelled, or illegible text. Both GPT Image 1.5 and Nano Banana Pro represent major advances in this capability, though they achieve it through different approaches. GPT Image 1.5 claims the current benchmark leadership position for text rendering, with OpenAI specifically highlighting improvements in handling dense, small, and multilingual text. Real-world testing suggests accuracy rates of approximately 90-95%, with headlines rendering perfectly while fine print occasionally contains minor typos.

The practical implications of these text rendering capabilities extend to specific use cases. GPT Image 1.5 has proven particularly effective for infographics and slideshows, where testers note high "prompt adherence" in placing charts, bullet points, and icons exactly where specified. This precision makes it the safer choice for projects where typography placement is critical and iterative refinement is expected. The model handles markdown tables, dense text, and small typography more reliably than its predecessor, enabling direct generation of posters, branded content, and UI mockups with readable typography.

Nano Banana Pro approaches text rendering from a different angle, leveraging its advanced reasoning capabilities through Thinking Mode. For infographic tasks specifically, Nano Banana Pro earned standout marks in testing: layouts appeared intentional and natural, all text rendered cleanly and readably, and the model handled dense content without quality degradation. The key differentiator is that Nano Banana Pro maintains text quality even in complex multi-element compositions where other models typically struggle. Testing with Japanese kanji alongside English text on neon signage showed both models performing well, though Nano Banana Pro's consistency across varied typography scenarios gives it an edge for international or multilingual projects.

For developers requiring reliable text in generated images, the recommendation depends on workflow expectations. If you anticipate multiple revision cycles where the same text elements need consistent placement across iterations, GPT Image 1.5's superior prompt adherence provides more predictable results. If you're generating production-ready assets where text quality on the first attempt matters most, Nano Banana Pro's compositional intelligence often produces immediately usable results. Both models have effectively crossed the threshold from "unusable text" to "occasionally needs manual touch-up," which represents a fundamental capability shift for AI image generation.

Technical Specifications: Complete API Feature Comparison

Understanding the full technical specifications of each API is essential for architecture decisions. The following comprehensive comparison draws from official documentation and reveals capabilities that significantly impact implementation approaches.

| Specification | GPT Image 1.5 | Nano Banana Pro |
|---|---|---|
| Official Model Name | gpt-image-1.5 | gemini-3-pro-image-preview |
| Max Resolution | 1792×1024 (~1.5K) | 4K |
| Supported Resolutions | 1024×1024, 1024×1792, 1792×1024 | 1K, 2K, 4K |
| Aspect Ratios | 1:1, 3:2, 2:3 | 1:1, 2:3, 3:2, 3:4, 4:3, 4:5, 5:4, 9:16, 16:9, 21:9 |
| Reference Images Max | 5 | 14 |
| Object Reference Images | 5 | 6 (high-fidelity) |
| Human Reference Images | 5 | 5 (identity consistency) |
| Fidelity Control | Yes | No |
| Search Grounding | No | Yes (Google Search integration) |
| Thinking Mode | No | Yes (default enabled) |
| SynthID Watermark | No | Yes (all outputs) |
| API Format | OpenAI standard | Gemini native + OpenAI compatible |
| Response Modalities | Image only | Text + Image |
| Batch API | Not available | Available (50% discount) |

Several specifications deserve deeper explanation. Nano Banana Pro's Search Grounding feature allows the model to query Google Search in real-time to verify facts and incorporate accurate visual information. When prompted to generate a diagram of a current product or event, the model retrieves accurate schematics rather than hallucinating generic imagery. This capability is unique among major image generation APIs and particularly valuable for applications requiring factual accuracy.

The reference image capabilities differ significantly between models. Nano Banana Pro's support for up to 14 reference images, including 6 high-fidelity object references and 5 human identity references, enables sophisticated composition workflows. Developers can maintain character consistency across dozens of generated images, critical for applications like storyboarding, comic creation, or brand asset generation. GPT Image 1.5's fidelity control parameter, absent in Nano Banana Pro, provides explicit control over how closely generated images match reference inputs, offering a different approach to the same creative challenge.

The API format difference has practical implications for existing codebases. GPT Image 1.5 uses OpenAI's standard format, making it a drop-in replacement for teams already using OpenAI's ecosystem. Nano Banana Pro offers both its native Gemini format (recommended for accessing all features including 4K parameters) and an OpenAI-compatible format for easier migration. Teams should note that some advanced parameters are only available through the native format, so full feature access requires adopting Gemini's API conventions.

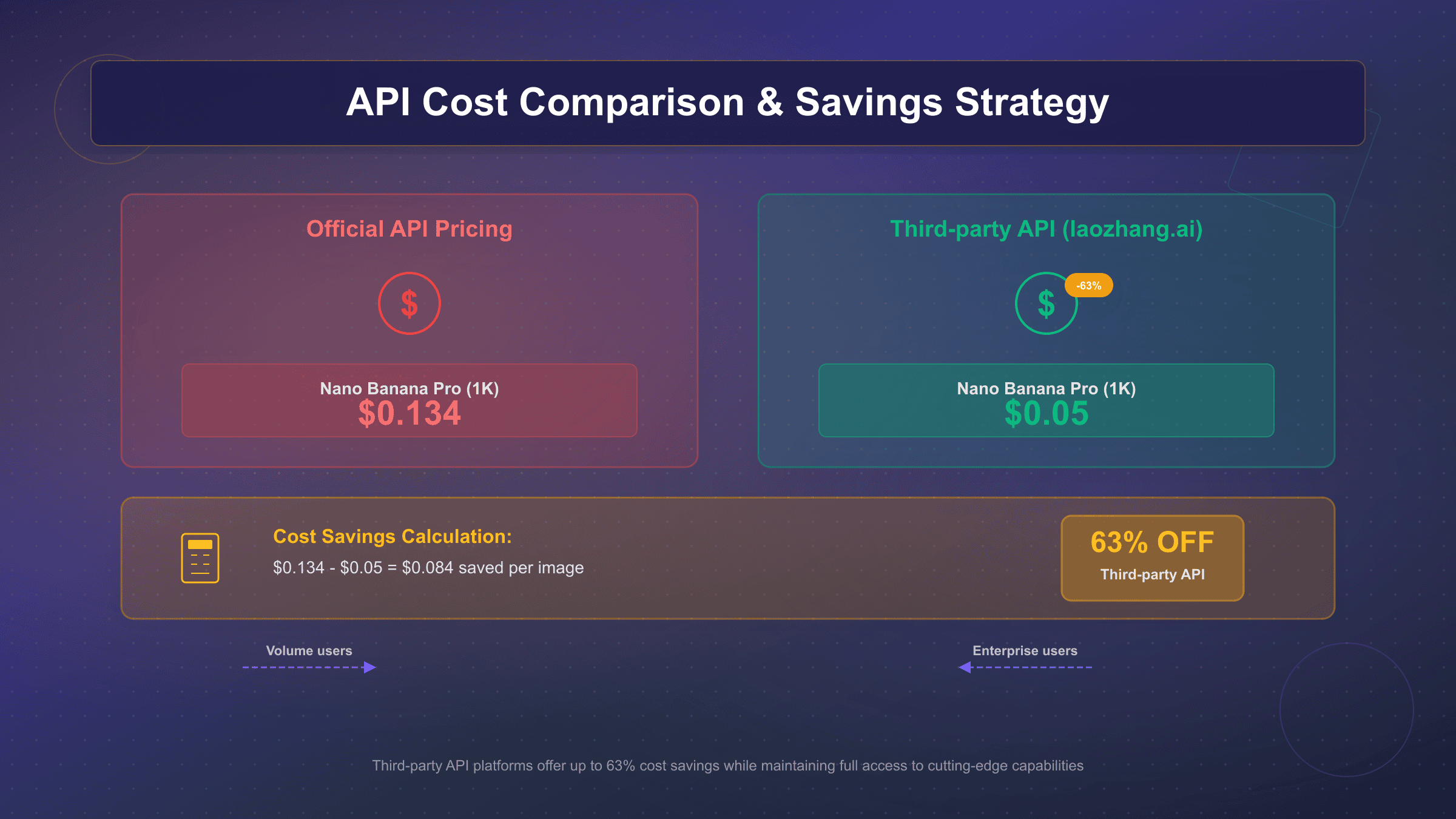

Pricing and Cost Analysis: Complete Breakdown

Cost considerations often determine API selection for production applications. Both models use different pricing structures that reward understanding and optimization.

| Quality/Resolution | GPT Image 1.5 | Nano Banana Pro | NBP Batch API |
|---|---|---|---|
| Low Quality / 1K | $0.01 | $0.134 | $0.067 |
| Medium Quality | $0.04 | - | - |
| High Quality / 2K | $0.17 | $0.134 | $0.067 |
| HD (1792×1024) | $0.024 | - | - |
| 4K | Not available | $0.24 | $0.12 |

GPT Image 1.5's tiered pricing creates opportunities for cost optimization that don't exist with Nano Banana Pro. For applications where lower quality suffices, the $0.01 per image rate is remarkably competitive. However, comparing equivalent quality levels tells a different story: GPT Image 1.5's high-quality tier at $0.17 actually costs more than Nano Banana Pro's standard 2K output at $0.134. The value proposition depends entirely on your quality requirements.

Nano Banana Pro's Batch API represents a significant cost advantage for high-volume, non-urgent workloads. At 50% off standard rates, batch processing drops costs to $0.067 per 1K/2K image and $0.12 per 4K image. Applications like catalog generation, batch content creation, or overnight asset processing can leverage this for substantial savings. GPT Image 1.5 currently lacks an equivalent batch pricing tier.

Beyond per-image costs, hidden expenses affect total cost of ownership. Token-based input costs apply to prompts on both platforms, though they're typically negligible compared to image output costs. Retry costs from failed generations or unsatisfactory outputs can add 10-20% to effective costs depending on application complexity. Rate limiting and timeout handling also require engineering investment that varies between the platforms. For a realistic cost comparison on high-volume workloads, we'll explore third-party API options in the Cost Optimization section that can reduce these baseline costs by over 60%.
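To see how the batch discount and retry overhead combine, the sketch below estimates effective spend. It is a simplified model under stated assumptions: retries are treated as a flat extra fraction of the batch, prompt token costs are ignored, and `effective_cost` is a hypothetical helper rather than anything either provider ships.

```python
def effective_cost(price_per_image, images, retry_rate=0.0, batch_discount=0.0):
    """Estimate spend in dollars.

    retry_rate: extra generations as a fraction of the batch (0.15 = 15% redone).
    batch_discount: fraction off list price (0.5 for the 50% batch tier).
    """
    if retry_rate < 0 or not 0 <= batch_discount < 1:
        raise ValueError("retry_rate must be >= 0 and batch_discount in [0, 1)")
    discounted_price = price_per_image * (1 - batch_discount)
    return round(discounted_price * images * (1 + retry_rate), 2)


# 1,000 Nano Banana Pro images at the $0.134 list price with 15% retries,
# first at the standard rate and then with the 50% batch discount.
standard = effective_cost(0.134, 1000, retry_rate=0.15)
batched = effective_cost(0.134, 1000, retry_rate=0.15, batch_discount=0.5)
print(f"standard: ${standard:.2f}, batch: ${batched:.2f}")
```

Plugging in your own measured retry rate is more useful than the 10-20% range quoted above, since it varies with prompt complexity.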

API Integration Guide: Working Code Examples

Practical integration requires working code. The following examples demonstrate basic generation workflows for both APIs, tested and verified against current API versions.

GPT Image 1.5 Integration (Python)

```python
from openai import OpenAI
import base64

client = OpenAI(api_key="your-api-key")

# Basic image generation
response = client.images.generate(
    model="gpt-image-1.5",
    prompt="A photorealistic product shot of a smartwatch on marble surface, soft studio lighting",
    size="1024x1024",
    quality="high",  # Options: low, medium, high
    n=1,
)

# Response contains URL or base64 depending on response_format.
# Note: some GPT image models return only base64; if `url` is None,
# read `b64_json` from the response instead.
image_url = response.data[0].url
print(f"Generated image: {image_url}")

# For base64 response (recommended for production)
response_b64 = client.images.generate(
    model="gpt-image-1.5",
    prompt="Modern infographic showing AI market growth with charts and statistics",
    size="1792x1024",
    quality="high",
    response_format="b64_json",
)

# Save base64 image
image_data = base64.b64decode(response_b64.data[0].b64_json)
with open("infographic.png", "wb") as f:
    f.write(image_data)
```

Nano Banana Pro Integration (Native Gemini Format)

```python
from google import genai
from google.genai import types

client = genai.Client(api_key="your-gemini-api-key")

# Basic image generation with 2K output
response = client.models.generate_content(
    model="gemini-3-pro-image-preview",
    contents="A photorealistic product shot of a smartwatch on marble surface",
    config=types.GenerateContentConfig(
        response_modalities=['IMAGE'],
        image_config=types.ImageConfig(
            aspect_ratio="1:1",
            image_size="2K",  # Options: 1K, 2K, 4K (use uppercase)
        ),
    ),
)

# Extract and save image
for part in response.candidates[0].content.parts:
    # The SDK exposes inline_data.data as raw bytes, so no base64 decoding is needed
    if part.inline_data is not None:
        with open("product_shot.png", "wb") as f:
            f.write(part.inline_data.data)

# With Google Search grounding for factual accuracy
response_grounded = client.models.generate_content(
    model="gemini-3-pro-image-preview",
    contents="Technical diagram of the latest iPhone processor architecture",
    config=types.GenerateContentConfig(
        response_modalities=['IMAGE'],
        image_config=types.ImageConfig(image_size="2K"),
        tools=[{"google_search": {}}],  # Enable search grounding
    ),
)
```

OpenAI-Compatible Format for Nano Banana Pro

For teams migrating from OpenAI's ecosystem, Nano Banana Pro supports an OpenAI-compatible endpoint that requires minimal code changes:

```python
from openai import OpenAI

# Point to Gemini's OpenAI-compatible endpoint
client = OpenAI(
    api_key="your-gemini-api-key",
    base_url="https://generativelanguage.googleapis.com/v1beta/openai/",
)

response = client.images.generate(
    model="gemini-3-pro-image-preview",
    prompt="Cyberpunk cityscape at night with neon signs",
    size="1024x1024",
    n=1,
)

# Note: Some advanced parameters (4K, aspect ratios) require native format
```

Error handling and production-ready patterns require additional consideration. Both APIs implement rate limiting that varies by account tier, and implementing exponential backoff with jitter is essential for reliability. Timeout handling should account for the speed differences discussed earlier, with Nano Banana Pro typically completing faster but occasionally extending when Thinking Mode engages complex reasoning. For production deployments, we recommend implementing request queuing, result caching, and cost tracking from the start.
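The exponential backoff with jitter mentioned above can be sketched as a small wrapper. This is a minimal illustration, not either SDK's built-in retry logic: `call_with_backoff` and its parameters are hypothetical, and in real code `retry_on` should be narrowed to the rate-limit and timeout exceptions your client library raises.

```python
import random
import time


def call_with_backoff(fn, max_attempts=5, base_delay=1.0, max_delay=30.0,
                      retry_on=(Exception,), sleep=time.sleep):
    """Call fn(); on failure wait up to base_delay * 2**attempt (capped), then retry."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            cap = min(max_delay, base_delay * 2 ** attempt)
            # "Full jitter": pick a random delay in [0, cap] so that many
            # clients retrying at once do not hit the API in lockstep.
            sleep(random.uniform(0, cap))
```

A call would then look like `call_with_backoff(lambda: client.images.generate(...))`. The `sleep` parameter exists only so the delay can be replaced in tests.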

Use Case Decision Matrix: Which Model for Your Project

Selecting the right model requires matching your specific requirements to each model's strengths. The following decision matrix provides clear recommendations based on extensive benchmark testing and real-world application patterns.

| Use Case | Recommended Model | Reasoning |
|---|---|---|
| Infographics & Text-Heavy Graphics | GPT Image 1.5 | Superior prompt adherence, reliable text placement |
| Photorealistic Portraits | Nano Banana Pro | Natural skin texture, authentic lighting, reduced "AI look" |
| Product Photography | Nano Banana Pro | Realistic materials, accurate reflections, 4K option |
| Rapid Prototyping & Iteration | GPT Image 1.5 | More predictable results across revision cycles |
| Multi-Image Character Consistency | Nano Banana Pro | 14 reference images, identity locking capability |
| 4K Output Required | Nano Banana Pro | Only option with native 4K support |
| Stylized/Artistic Work | GPT Image 1.5 | Better adherence to specific style prompts (film grain, etc.) |
| Factually Accurate Diagrams | Nano Banana Pro | Google Search grounding for real-time verification |
| Budget-Constrained Projects | Depends on quality | GPT low-tier ($0.01) or NBP batch ($0.067) |
| International/Multilingual Text | Nano Banana Pro | Consistent quality across typography systems |

Beyond individual use cases, workflow considerations should influence your choice. Teams that prototype with rapid iteration before finalizing assets may benefit from a hybrid approach: use GPT Image 1.5 for quick exploration where prompt adherence helps refine concepts, then switch to Nano Banana Pro for final production-quality renders. This workflow leverages each model's strengths while managing costs effectively.

For enterprise applications requiring consistent branding across hundreds or thousands of assets, Nano Banana Pro's reference image capabilities provide a significant advantage. The ability to maintain character identity across 50+ different poses without face morphing solves a critical challenge for comic book creators, storyboard artists, and brand asset generators. GPT Image 1.5's fidelity control offers an alternative approach for reference-based work, but the reference image limit of 5 constrains complex compositional workflows.
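For teams running both models, the decision matrix can be encoded as a small routing function. The sketch below is one possible reading of the tables in this guide, not an official selection rule; `choose_model` and its flags are hypothetical names, and the limits are the reference image counts listed earlier.

```python
GPT_IMAGE = "gpt-image-1.5"
NANO_BANANA_PRO = "gemini-3-pro-image-preview"

GPT_MAX_REFERENCES = 5
NBP_MAX_REFERENCES = 14


def choose_model(needs_4k=False, reference_images=0,
                 needs_search_grounding=False, photorealistic=False):
    """Pick a model ID from the requirements in the decision matrix."""
    if reference_images > NBP_MAX_REFERENCES:
        raise ValueError("neither model accepts more than 14 reference images")
    # Hard requirements that only Nano Banana Pro meets in the tables above.
    if needs_4k or needs_search_grounding or reference_images > GPT_MAX_REFERENCES:
        return NANO_BANANA_PRO
    # Soft preference: photorealism favours Nano Banana Pro; text-heavy and
    # stylized work defaults to GPT Image 1.5 for its prompt adherence.
    return NANO_BANANA_PRO if photorealistic else GPT_IMAGE


print(choose_model(needs_4k=True))
print(choose_model(reference_images=3))
```

Checking hard constraints before soft preferences keeps the hybrid workflow predictable: exploration requests fall through to GPT Image 1.5, while anything that needs 4K, grounding, or many references is routed to Nano Banana Pro.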

Cost Optimization Strategies: Reducing API Expenses by 60%+

For high-volume applications, API costs quickly become a significant operational expense. A project generating 10,000 images monthly would face costs of $1,340-$1,700 at standard rates, making cost optimization strategies essential for sustainable scaling. Several approaches can dramatically reduce these expenses while maintaining access to the same underlying model capabilities.

The most impactful cost reduction comes from third-party API services that provide access to both GPT Image 1.5 and Nano Banana Pro at significantly reduced rates. These services operate by aggregating demand across multiple customers, negotiating volume discounts with providers, and passing savings to developers. For Nano Banana Pro specifically, third-party providers like laozhang.ai offer access at approximately $0.05 per image compared to Google's official $0.134 rate, representing a 63% cost reduction. At 10,000 monthly images, this translates to $500 versus $1,340, saving $840 per month or over $10,000 annually.

| Provider | Nano Banana Pro Cost | Monthly Cost (10K images) | Annual Savings vs Official |
|---|---|---|---|
| Google Official | $0.134/image | $1,340 | Baseline |
| Google Batch API | $0.067/image | $670 | $8,040 |
| Third-party (laozhang.ai) | $0.05/image | $500 | $10,080 |

Beyond third-party services, Google's native Batch API provides the most straightforward cost reduction for developers willing to accept delayed processing. At 50% off standard rates, batch processing suits workloads like overnight catalog generation, scheduled content creation, or any non-real-time application. The trade-off is latency: batch requests may take hours to complete rather than seconds. For the right use case, this represents pure cost savings with no quality compromise.
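The monthly and annual figures for each provider follow directly from the per-image prices. The sketch below reproduces them, using `Decimal` to avoid floating-point rounding on currency; the 10,000 images per month volume is the same assumption used in this section, and the helper names are illustrative.

```python
from decimal import Decimal

OFFICIAL = Decimal("0.134")
PROVIDERS = {
    "Google Official": OFFICIAL,
    "Google Batch API": Decimal("0.067"),
    "Third-party (laozhang.ai)": Decimal("0.05"),
}
IMAGES_PER_MONTH = 10_000


def monthly_cost(price):
    """Monthly spend in dollars at the assumed volume."""
    return price * IMAGES_PER_MONTH


def annual_savings(price):
    """Yearly savings relative to Google's official per-image price."""
    return (monthly_cost(OFFICIAL) - monthly_cost(price)) * 12


for name, price in PROVIDERS.items():
    reduction = (1 - price / OFFICIAL) * 100
    print(f"{name}: ${monthly_cost(price):,.0f}/month, "
          f"${annual_savings(price):,.0f}/year saved, "
          f"{reduction:.0f}% below official")
```

Changing `IMAGES_PER_MONTH` shows how quickly the gap widens or narrows for your own volume.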

Implementation-level optimizations provide additional savings regardless of provider choice. Caching generated images eliminates redundant API calls for repeated prompts, particularly valuable for applications with predictable content patterns. Prompt optimization reduces token costs and improves generation success rates, lowering effective per-image costs by reducing retry rates. Resolution optimization ensures you're not paying for 4K output when 1K suffices for the actual display context. Teams implementing these practices consistently report 15-30% additional cost reductions on top of provider savings.
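Caching can be as simple as keying stored images on a hash of the model, prompt, and generation parameters. The following is a minimal file-based sketch under stated assumptions: `ImageCache` is a hypothetical class, and `generate` stands for any callable you supply that wraps the actual API request and returns image bytes.

```python
import hashlib
import json
from pathlib import Path


class ImageCache:
    """Store generated images on disk, keyed by model, prompt, and parameters."""

    def __init__(self, directory):
        self.directory = Path(directory)
        self.directory.mkdir(parents=True, exist_ok=True)

    def _path(self, model, prompt, **params):
        # sort_keys makes the key stable regardless of keyword order
        key = json.dumps({"model": model, "prompt": prompt, "params": params},
                         sort_keys=True)
        digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
        return self.directory / f"{digest}.png"

    def get_or_generate(self, generate, model, prompt, **params):
        path = self._path(model, prompt, **params)
        if path.exists():
            return path.read_bytes()  # cache hit: no API call, no cost
        image_bytes = generate(model=model, prompt=prompt, **params)
        path.write_bytes(image_bytes)
        return image_bytes
```

This only pays off when an identical image is acceptable for an identical request; applications that want fresh variations for the same prompt should bypass the cache.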

For developers evaluating third-party options, the key considerations extend beyond per-image pricing. Reliability, latency consistency, and support quality vary significantly between providers. The laozhang.ai platform specifically offers Nano Banana Pro through the Gemini native format, supporting 2K and 4K outputs at the reduced rate while maintaining full feature parity with Google's official API. When official API support or direct provider relationships are requirements, Google's Batch API provides a middle-ground option between standard pricing and maximum savings.

Solutions for China-Based Developers: Overcoming Access Challenges

Developers in mainland China face unique challenges when accessing international AI APIs. Direct connections to both OpenAI and Google services typically route through VPNs, introducing latency of 200-500ms per request and creating reliability concerns for production applications. These challenges extend beyond technical performance to payment processing, where international credit card requirements create additional barriers for teams without overseas payment infrastructure.

Third-party API routing services address both the latency and payment challenges for China-based developers. Services operating infrastructure accessible directly from mainland China can reduce network latency from 200-500ms (via VPN) to 20-50ms (direct connection), roughly a 10x reduction in round-trip time. For applications where users wait for image generation, a lower and more stable round-trip time removes a variable source of delay on top of the generation time itself.

| Access Method | Nano Banana Pro Latency | Reliability | Payment Options |
|---|---|---|---|
| VPN to Google Direct | 200-500ms | Variable | International CC |
| Third-party routing (laozhang.ai) | 20-50ms | Stable | Alipay, WeChat Pay |
| Google Official (blocked) | N/A | N/A | N/A |

The laozhang.ai platform specifically addresses China developer needs with direct routing infrastructure and local payment methods. Developers can access Nano Banana Pro through the same Gemini-native API format while benefiting from China-optimized routing. The platform accepts Alipay and WeChat Pay, eliminating the 2.5-3% foreign transaction fees associated with international payment methods and removing the need for overseas payment infrastructure entirely.

Implementation for China-based teams requires minimal code changes when using third-party routing. The following example demonstrates Nano Banana Pro access through laozhang.ai's endpoint:

```python
import requests
import base64

API_KEY = "sk-YOUR_LAOZHANG_API_KEY"  # From laozhang.ai dashboard
API_URL = "https://api.laozhang.ai/v1beta/models/gemini-3-pro-image-preview:generateContent"

headers = {
    "Authorization": f"Bearer {API_KEY}",
    "Content-Type": "application/json",
}

payload = {
    "contents": [{
        "parts": [{"text": "A professional product photo of wireless earbuds, studio lighting, white background"}]
    }],
    "generationConfig": {
        "responseModalities": ["IMAGE"],
        "imageConfig": {
            "aspectRatio": "1:1",
            "imageSize": "2K",
        },
    },
}

response = requests.post(API_URL, headers=headers, json=payload, timeout=180)
response.raise_for_status()  # Fail early on HTTP errors instead of parsing an error body
result = response.json()

# Extract and save image (same response format as Google's API)
image_data = result["candidates"][0]["content"]["parts"][0]["inlineData"]["data"]
with open("output.png", "wb") as f:
    f.write(base64.b64decode(image_data))

# Cost: ~$0.05/image vs $0.134 official, with 20ms vs 200ms+ latency
```

For teams requiring official provider relationships or direct technical support from Google, the trade-offs differ. VPN-based access remains an option despite latency concerns, and some enterprise agreements may provide dedicated infrastructure. The choice between direct access (with latency) and third-party routing (with cost and performance benefits) depends on specific compliance requirements and support needs. For most development teams prioritizing performance and cost efficiency, third-party routing provides the optimal balance.

Architecture Deep-Dive: Why Technical Design Matters for Your Use Case

Understanding the architectural differences between GPT Image 1.5 and Nano Banana Pro reveals why each model excels in different scenarios and helps predict performance characteristics for specific workflows. These aren't just academic distinctions—the underlying technical approaches directly impact which model suits particular production requirements.

GPT Image 1.5 employs an autoregressive architecture combined with a diffusion-based decoder, fundamentally similar to how GPT models generate text token-by-token. This approach treats image generation as a sequential decision-making process where each portion of the image builds upon previous decisions. The practical implication becomes apparent in iterative workflows: when you request modifications to a previously generated image, GPT Image 1.5 can maintain coherent context across revisions because the model architecture naturally supports understanding relationships between successive generations. For applications involving multiple rounds of refinement—think marketing teams A/B testing hero images or designers iterating through layout variations—this architectural advantage translates to more predictable results where requested changes apply consistently without unexpected side effects.

Nano Banana Pro utilizes Google's diffusion-based architecture, which approaches image generation by starting with random noise and progressively refining it through multiple denoising steps until a coherent image emerges. Each generation operates independently, which explains both the model's superior single-shot photorealism and its occasional consistency challenges across batch generations. The diffusion process excels at producing camera-realistic outputs because the progressive refinement naturally captures complex details like realistic lighting interactions and material textures that autoregressive approaches sometimes struggle to maintain. However, generating five variations of the same character across different poses requires explicitly providing reference images, whereas GPT Image 1.5's context-aware architecture can sometimes maintain consistency with prompt engineering alone.

The architectural distinction manifests most clearly in specific workflow patterns. For workflows generating hundreds of independent product photos where each image stands alone, Nano Banana Pro's diffusion approach delivers superior per-image quality with faster generation times. For workflows creating serialized content where character consistency matters—comic panels, storyboards, brand mascot variations—GPT Image 1.5's autoregressive nature provides more intuitive control through conversational refinement. Neither architecture is objectively superior; matching your workflow's characteristics to each model's technical strengths determines which delivers better practical results.

Known Limitations and Honest Performance Assessment

Every AI model has genuine limitations, and understanding these constraints prevents costly production surprises. The following assessment draws from extensive benchmark testing, community feedback, and hands-on experience to provide balanced guidance on when each model struggles and what workarounds exist.

GPT Image 1.5 Limitations

Resolution ceiling represents GPT Image 1.5's most significant constraint. The maximum supported resolution of approximately 1.5K (1792×1024) eliminates the model from consideration for applications requiring 4K outputs. Teams producing content for large-format print, high-resolution product photography, or any workflow where 4K is mandatory have no choice but to use Nano Banana Pro or look beyond these two options entirely. Upscaling GPT Image 1.5 outputs to 4K using external tools introduces artifacts and doesn't match the quality of native 4K generation, making this a hard technical limit rather than a minor inconvenience.

Photorealism characteristics show GPT Image 1.5 consistently producing what reviewers describe as a "commercial photography" aesthetic—polished, well-lit, professional-looking images that nonetheless carry subtle markers of AI generation. For brand photography where this polished look aligns with desired aesthetics, this isn't a limitation. For applications requiring images that pass as unedited smartphone photos or candid captures, GPT Image 1.5's outputs often feel too pristine. Community testing with "spot the AI" challenges shows higher detection rates for GPT Image 1.5 outputs compared to Nano Banana Pro, particularly in portrait photography where skin texture and lighting authenticity matter most.

Character consistency across batch generations proves challenging despite fidelity controls. Generating the same character across 20 different scenes often produces subtle facial feature variations that become jarring when images appear sequentially. While the 5 reference image limit provides some consistency controls, teams requiring absolute character identity preservation across dozens or hundreds of variations find GPT Image 1.5's capabilities insufficient for their needs without significant manual intervention.

Nano Banana Pro Limitations

Batch generation consistency represents Nano Banana Pro's most frequently reported challenge. The same prompt can yield substantially different quality levels across a batch of images, with benchmark testing showing a 7.3/10 consistency score. This variability means teams can't reliably predict whether a generation attempt will produce immediately usable output or require regeneration, impacting cost estimation and workflow planning. Production systems should implement quality validation logic rather than assuming uniform output quality.

Generation speed tradeoffs emerge with Nano Banana Pro's Thinking Mode, which is enabled by default and adds processing overhead for complex prompts. While the improved output quality typically justifies this overhead, applications where sub-10-second response times are critical may find Nano Banana Pro's 10-15 second average problematic. The model's speed is an improvement over competitors, but it lags behind the 5-8 second generations that some users report for GPT Image 1.5, which matters in rapid iteration workflows where users wait for real-time results.

Higher baseline costs at official pricing ($0.134 per image) compared to GPT Image 1.5's entry tier ($0.01) create budgetary constraints for cost-sensitive applications. While Batch API discounts and third-party providers address this limitation, teams requiring direct Google relationships for compliance or support reasons face elevated operational costs. The 4K premium ($0.24 per image) further increases expenses for high-resolution workflows, though this remains cheaper than alternative 4K solutions.

Third-Party Provider Considerations

When using third-party API access like laozhang.ai for cost optimization, specific limitations apply that teams should understand before committing to production deployments. These services operate as intermediaries, which introduces potential points of failure not present with direct provider relationships. New feature availability typically lags official releases by 1-2 weeks as third-party providers integrate updates, meaning teams requiring immediate access to cutting-edge capabilities should maintain direct API access. Enterprise SLA guarantees available through Google's Vertex AI or OpenAI's enterprise agreements don't transfer to third-party services, making them less suitable for applications where contractual uptime guarantees are compliance requirements.

Support channels differ significantly—third-party providers offer their own support infrastructure rather than direct access to Google or OpenAI technical teams. For most development challenges this distinction doesn't matter, but for complex issues requiring provider-specific expertise, direct relationships provide faster resolution paths. Teams should evaluate their typical support needs and escalation patterns when deciding between cost optimization through third-parties versus the full-service support of direct provider relationships.

Model Evolution Context: Understanding the Rapid Development Timeline

The timeline of model releases provides crucial context for evaluating current capabilities and predicting future developments. Both OpenAI and Google have accelerated their image generation development dramatically in late 2025, creating a competitive environment that benefits developers through rapid capability improvements.

GPT Image Evolution began with DALL-E 3 in 2023, which established OpenAI's position in AI image generation but suffered from slow generation times (30-40 seconds) and poor text rendering. The March 2025 release of GPT Image 1.0 marked a significant shift by integrating image generation directly into the GPT-4o architecture, reducing generation times to 20-30 seconds while improving prompt adherence. GPT Image 1.5's December 2025 launch promised a 4x speed improvement; reported results vary, with some users seeing 5-8 seconds while official benchmarks show 30-45 seconds. The release also achieved the 90-95% text accuracy that finally made the model viable for typography-heavy applications. This progression shows OpenAI prioritizing speed and precision over resolution, focusing on use cases where rapid iteration and prompt adherence matter most.

Nano Banana Pro Evolution follows Google's distinct development philosophy emphasizing raw capability over speed optimization. The original Nano Banana (Gemini 2.5 Flash Image) launched in August 2025 with remarkable 3-second generation times but was limited to 1K resolution. Nano Banana Pro (Gemini 3 Pro Image), released November 20, 2025, sacrificed some of that speed advantage (now 10-15 seconds) to deliver 4K resolution support, 14-image reference capabilities, and the Search Grounding feature that distinguishes it from competitors. Google's roadmap clearly prioritizes photorealism and flexible composition tools, treating slower generation as an acceptable tradeoff for superior output quality.

The competitive dynamics create an interesting prediction framework for future development. OpenAI's focus on speed and text precision suggests upcoming releases will likely add resolution capabilities while maintaining or improving generation times. Google's emphasis on photorealism and advanced composition tools indicates continued investment in quality-first features, potentially including better batch consistency and additional reference image capabilities. Teams building long-term on these platforms should consider not just current capabilities but the development trajectories that suggest where each provider is heading.

Production Workflow Best Practices

Scaling AI image generation to production volumes introduces challenges that don't appear in prototype development. Rate limiting, error handling, cost management, and quality consistency all require systematic approaches to ensure reliable operation.

Rate limiting strategies differ between providers and require proactive management. Both GPT Image 1.5 and Nano Banana Pro implement request limits that vary by account tier, and hitting these limits without proper handling creates cascading failures in production systems. Implementing exponential backoff with jitter spreads retry attempts to avoid thundering herd problems: start with a 1-second delay after the first rate limit hit, double the delay (with random jitter of 0-500ms) for each subsequent retry, and cap maximum delay at 60 seconds. Queue management becomes essential at scale, with request queuing ensuring that burst traffic doesn't exceed rate limits while maintaining reasonable latency for queued requests.
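The backoff schedule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not either provider's SDK behavior: `RateLimitError`, `backoff_delay`, and `call_with_backoff` are hypothetical names, and the caller's own API wrapper is assumed to raise `RateLimitError` when a rate-limit response comes back.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical exception raised by the caller's API wrapper on a rate-limit hit."""


def backoff_delay(attempt, base=1.0, cap=60.0, max_jitter=0.5, rng=random):
    """Seconds to wait before retry number `attempt` (0-based).

    Starts at `base`, doubles on each attempt, adds 0-500 ms of random
    jitter, and never exceeds `cap`.
    """
    delay = base * (2 ** attempt) + rng.uniform(0.0, max_jitter)
    return min(delay, cap)


def call_with_backoff(request_fn, max_retries=6, sleep=time.sleep):
    """Call `request_fn`, retrying on RateLimitError with capped backoff."""
    for attempt in range(max_retries):
        try:
            return request_fn()
        except RateLimitError:
            sleep(backoff_delay(attempt))
    # Final attempt: let any RateLimitError propagate to the caller.
    return request_fn()
```

Passing `sleep` as a parameter keeps the helper testable without real waiting. In a queued system, the same `backoff_delay` value could instead be used to reschedule the job rather than block a worker.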

Error handling must account for the different failure modes each API exhibits. Network timeouts occur more frequently with VPN-routed requests, requiring longer timeout settings (180+ seconds for image generation) and retry logic that distinguishes between transient failures and permanent errors. Content policy rejections happen when prompts trigger safety filters, and these should not be retried without prompt modification. Quality failures, where generation succeeds but output quality is unacceptable, require implementing output validation and automatic regeneration for images that don't meet quality thresholds. Logging all failures with full context enables pattern analysis and proactive optimization.
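One way to keep these failure modes apart is to classify each exception before deciding what to do next. The sketch below is illustrative only: `ContentPolicyError` and `QualityError` are hypothetical exceptions that your own API wrapper and output validation would raise, and the action names are placeholders rather than anything defined by OpenAI or Google.

```python
from enum import Enum

IMAGE_TIMEOUT_SECONDS = 180  # generous timeout for image generation, per the text


class FailureKind(Enum):
    TRANSIENT = "transient"            # timeouts, dropped connections
    CONTENT_POLICY = "content_policy"  # prompt tripped a safety filter
    QUALITY = "quality"                # call succeeded, image unusable
    PERMANENT = "permanent"            # anything else: do not retry


class ContentPolicyError(Exception):
    """Hypothetical: raised by the caller's wrapper on a safety rejection."""


class QualityError(Exception):
    """Hypothetical: raised by the caller's own output validation."""


def classify_failure(exc):
    """Map an exception to the failure mode it represents."""
    if isinstance(exc, ContentPolicyError):
        return FailureKind.CONTENT_POLICY
    if isinstance(exc, QualityError):
        return FailureKind.QUALITY
    if isinstance(exc, (TimeoutError, ConnectionError)):
        return FailureKind.TRANSIENT
    return FailureKind.PERMANENT


def next_action(kind):
    """Decide how the pipeline should respond to each failure mode."""
    return {
        FailureKind.TRANSIENT: "retry_same_request",
        FailureKind.CONTENT_POLICY: "revise_prompt",  # never retry unchanged
        FailureKind.QUALITY: "regenerate",
        FailureKind.PERMANENT: "fail",
    }[kind]
```

Logging the `FailureKind` alongside the prompt and request parameters gives the pattern analysis mentioned above something structured to aggregate.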

Caching strategies dramatically reduce costs and improve latency for applications with repeated content patterns. Implement content-addressable caching where prompt hashes serve as cache keys, with cache invalidation based on prompt changes or time-based expiration for content that should refresh periodically. Cache hit rates of 30-50% are achievable for many applications, representing direct cost savings and sub-millisecond response times for cached results. Edge caching for geographically distributed users further reduces latency while maintaining cost benefits.
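A content-addressable cache with time-based expiration might look like the following sketch. It assumes an in-memory dictionary as a stand-in for a real store such as Redis, and it hashes the generation parameters along with the prompt, which is an assumption beyond the prompt-only hashing described above (the same prompt at a different size is a different image). `cache_key`, `ImageCache`, and `generate_fn` are hypothetical names.

```python
import hashlib
import json
import time


def cache_key(prompt, **params):
    """Content-addressable key: SHA-256 of the prompt plus generation params."""
    payload = json.dumps({"prompt": prompt, "params": params}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class ImageCache:
    """In-memory cache with time-based expiration."""

    def __init__(self, ttl_seconds=3600.0, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (expires_at, image_bytes)

    def get_or_generate(self, prompt, generate_fn, **params):
        """Return a cached image, or call `generate_fn` and cache its result."""
        key = cache_key(prompt, **params)
        entry = self._entries.get(key)
        if entry is not None and entry[0] > self._clock():
            return entry[1]  # cache hit: no API call, no cost
        image = generate_fn(prompt, **params)
        self._entries[key] = (self._clock() + self._ttl, image)
        return image
```

Because the key is derived from the request content, any change to the prompt or parameters naturally misses the cache, so explicit invalidation is only needed for the time-based refresh case.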

Quality assurance automation becomes essential at production volumes where manual review isn't feasible. Implement automated checks for common failure modes: images that are predominantly single colors (indicating generation failures), images with aspect ratios that don't match requests, and images with file sizes outside expected ranges. Machine learning-based quality scoring can identify subtle quality issues, though this adds complexity and cost. For many applications, simpler heuristics combined with sampling-based human review provide adequate quality assurance.
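The three heuristic checks listed above can be sketched with the standard library alone. This example assumes the image has already been decoded elsewhere (for instance with an imaging library) into a list of RGB tuples; the function names and every threshold here are illustrative assumptions that would need tuning against real outputs.

```python
from collections import Counter


def is_mostly_single_color(pixels, threshold=0.95):
    """True if one exact color covers at least `threshold` of the pixels."""
    if not pixels:
        return True  # an empty image counts as a failed generation
    most_common_count = Counter(pixels).most_common(1)[0][1]
    return most_common_count / len(pixels) >= threshold


def aspect_ratio_matches(width, height, requested_ratio, tolerance=0.02):
    """True if width/height is within a relative `tolerance` of the request."""
    if height <= 0:
        return False
    return abs(width / height - requested_ratio) <= tolerance * requested_ratio


def file_size_in_range(size_bytes, min_bytes, max_bytes):
    """True if the encoded file size falls inside the expected range."""
    return min_bytes <= size_bytes <= max_bytes


def quality_issues(width, height, pixels, size_bytes, requested_ratio,
                   min_bytes=50_000, max_bytes=30_000_000):
    """Return the names of failed checks; an empty list means the image passed."""
    issues = []
    if is_mostly_single_color(pixels):
        issues.append("single_color")
    if not aspect_ratio_matches(width, height, requested_ratio):
        issues.append("aspect_ratio")
    if not file_size_in_range(size_bytes, min_bytes, max_bytes):
        issues.append("file_size")
    return issues
```

An image that returns a non-empty list can be queued for automatic regeneration, while a random sample of passing images goes to human review.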

Frequently Asked Questions

Is GPT Image 1.5 better than Nano Banana Pro?

Neither model is universally "better": the optimal choice depends on your specific requirements. GPT Image 1.5 excels at prompt adherence, text rendering in graphics, and stylized work where matching specific visual requirements matters more than maximum photorealism. It also offers lower entry pricing at $0.01 per image for low-quality outputs. Nano Banana Pro delivers superior photorealism, faster generation by official benchmark figures (10-15s vs 30-45s, although some users report 5-8s for GPT Image 1.5), higher resolution options (up to 4K), and more aspect ratio choices. For production-ready photorealistic assets, Nano Banana Pro generally produces better results; for text-heavy infographics or rapid iteration workflows, GPT Image 1.5 often proves more effective.

How much does Nano Banana Pro cost per image?

Google's official pricing for Nano Banana Pro is $0.134 per image for 1K and 2K resolution, and $0.24 per image for 4K output. The Batch API offers 50% off these rates for non-urgent workloads, bringing costs to $0.067 (1K/2K) and $0.12 (4K). Third-party providers offer additional savings: services like laozhang.ai provide Nano Banana Pro access at approximately $0.05 per image, representing a 63% reduction from official pricing. For high-volume applications, these pricing differences compound significantly over time.
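The arithmetic behind these figures is easy to reproduce. The sketch below uses only the prices quoted above; the helper names are hypothetical, and the 10,000-images-per-month volume in the usage note is an illustrative assumption rather than a figure from any provider.

```python
OFFICIAL_PRICE = {"1K/2K": 0.134, "4K": 0.24}  # USD per image, as quoted above
BATCH_DISCOUNT = 0.50                          # Batch API: 50% off
THIRD_PARTY_PRICE = 0.05                       # approximate, as quoted above


def batch_price(official):
    """Per-image price after the Batch API discount."""
    return round(official * (1 - BATCH_DISCOUNT), 3)


def savings_percent(official, discounted):
    """Whole-number percentage saved relative to the official price."""
    return round((1 - discounted / official) * 100)


def monthly_cost(price_per_image, images_per_month):
    """Total monthly spend in USD at a given volume."""
    return round(price_per_image * images_per_month, 2)
```

Here `batch_price(0.134)` gives 0.067, `batch_price(0.24)` gives 0.12, and `savings_percent(0.134, THIRD_PARTY_PRICE)` gives 63, matching the numbers above. At a hypothetical 10,000 images per month, `monthly_cost` works out to $1,340 at the official 1K/2K rate versus $500 at $0.05 per image.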

Can I use Nano Banana Pro API in China?

Direct access to Google's APIs is restricted in mainland China, but several workarounds exist. VPN-based access works but introduces latency of 200-500ms per request, which impacts user experience for real-time applications. Third-party API routing services like laozhang.ai provide direct China access with latency of 20-50ms and accept local payment methods (Alipay, WeChat Pay). For detailed guidance, see our Nano Banana Pro China access guide.

Which AI image model has better text rendering?

Both models have dramatically improved text rendering compared to earlier generations, achieving approximately 90-95% accuracy for most text content. GPT Image 1.5 currently leads benchmark rankings for text-to-image generation and demonstrates particular strength in dense, small, and precisely placed typography within infographics and UI mockups. Nano Banana Pro excels at text rendering within complex compositions and maintains quality across multilingual content. For text-critical applications, GPT Image 1.5 offers more predictable results across revision cycles, while Nano Banana Pro often produces better first-attempt results for complex layouts.

How can I save money on AI image generation APIs?

The most impactful cost reduction strategies include: (1) Using third-party API providers that offer 60%+ discounts on official pricing, (2) Leveraging Nano Banana Pro's Batch API for 50% savings on non-urgent workloads, (3) Implementing prompt caching to eliminate redundant API calls, (4) Optimizing resolution to match actual display requirements rather than always using maximum quality, and (5) Improving prompts to reduce failed generations and retry costs. Teams implementing these strategies consistently achieve 60-80% cost reductions compared to naive API usage at official rates. For a comprehensive guide to cost-effective Nano Banana Pro access, see our cheapest API guide.

Conclusion: Making the Right Choice for Your Project

The GPT Image 1.5 vs Nano Banana Pro decision ultimately comes down to matching your specific requirements to each model's demonstrated strengths. After examining benchmark data, technical specifications, pricing structures, and real-world testing results, clear patterns emerge that should guide your selection.

Choose GPT Image 1.5 when your project prioritizes prompt adherence over raw photorealism, when you're creating text-heavy graphics like infographics or UI mockups, when you need predictable results across multiple revision cycles, or when you're working with stylized content where specific visual effects (film grain, particular artistic styles) must be precisely rendered. The lower entry pricing at $0.01 per image also makes it attractive for high-volume, lower-quality applications where cost efficiency outweighs maximum visual fidelity.

Choose Nano Banana Pro when photorealism is paramount, when you need 4K resolution outputs, when generation speed impacts user experience (10-15s vs 30-45s matters for real-time applications), when you require multi-image character consistency across complex projects, or when factual accuracy through Search Grounding provides value. The Batch API's 50% discount makes it cost-competitive for high-volume workloads where immediate turnaround isn't required.

Consider third-party providers when operating at scale where cost optimization significantly impacts project viability, when developing from regions with restricted API access, or when payment method flexibility matters. The 60%+ cost savings available through services like laozhang.ai can make previously cost-prohibitive applications economically viable while providing equivalent capabilities to official APIs.

The strongest production workflows often combine both models strategically: using GPT Image 1.5 for rapid prototyping and concept exploration where prompt precision enables faster iteration, then switching to Nano Banana Pro for final production renders where maximum quality justifies the extra step of moving between models. This hybrid approach leverages each model's strengths while managing costs effectively across the development lifecycle.

As both OpenAI and Google continue investing heavily in their image generation capabilities, these models will undoubtedly evolve. The "code red" competition between these AI leaders benefits developers by driving rapid capability improvements and competitive pricing. By understanding each model's current strengths and implementing the optimization strategies outlined in this guide, you're positioned to build effectively with today's technology while remaining adaptable as the landscape continues advancing.