Free GPT-5.2 API Without Rate Limits: Complete Guide to Affordable Access [2026]

Looking for free GPT-5.2 API access without rate limits? This comprehensive guide covers official pricing, legitimate alternatives like Puter.js and DeepSeek, security risks of unofficial services, and practical code examples for developers.

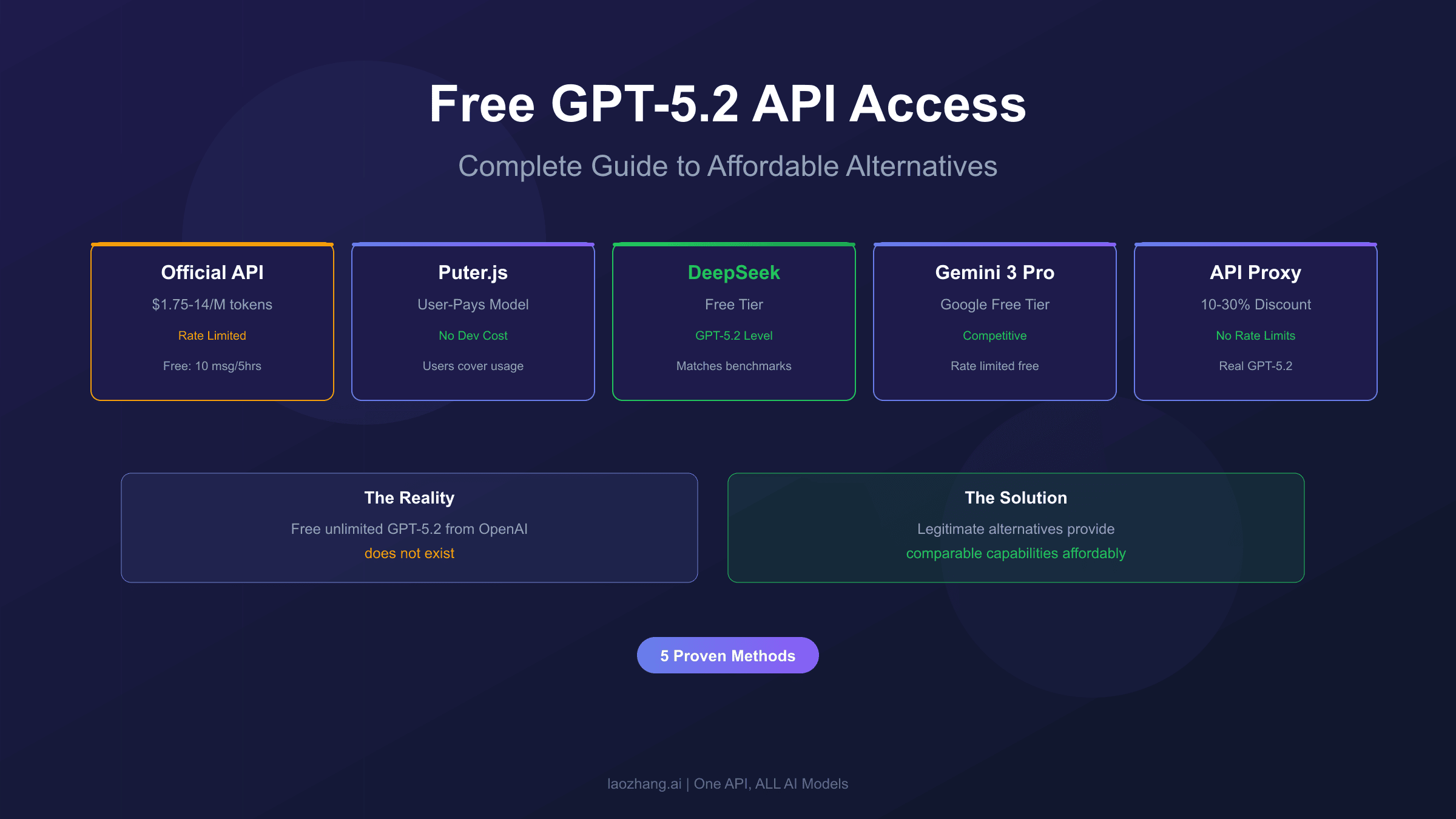

The search for "free GPT-5.2 API without rate limits" reflects a common developer desire: accessing the most powerful AI model without the constraints of pay-per-token pricing or strict usage caps. The reality, however, is more nuanced than a simple yes or no answer. While OpenAI does not offer a truly free, unlimited API, several legitimate pathways exist that can dramatically reduce costs or provide comparable capabilities at no charge.

This guide cuts through the noise of misleading claims about "free unlimited API access" and presents a practical, security-conscious approach to accessing GPT-5.2 capabilities affordably. Whether you are a solo developer building a side project, a startup optimizing costs, or an enterprise evaluating options, you will find actionable strategies here that balance capability, cost, and risk.

Before diving into alternatives, understanding the official pricing structure is essential. OpenAI's GPT-5.2 API costs $1.75 per million input tokens and $14.00 per million output tokens—significant for high-volume applications. The free tier on ChatGPT allows only 10 messages every 5 hours with GPT-5.2 before downgrading to a mini model. These constraints drive the search for alternatives, but not all alternatives are created equal.

| Access Method | Cost | Rate Limit | Risk Level |
|---|---|---|---|
| Official API | $1.75 input / $14.00 output per 1M tokens | Tier-based | None |
| ChatGPT Free | $0 | 10 messages / 5 hours | None |
| Puter.js | Paid by end user | None | Low |
| API Proxy | ~20% below official pricing | None | Low-Medium |
| Unofficial "Free" | $0 | Unknown | High |

Why Truly Free Unlimited GPT-5.2 API Does Not Exist

The fundamental economics of large language models make "free unlimited" access unsustainable without a clear business model. Each GPT-5.2 API call consumes significant computational resources—GPU time, electricity, and infrastructure costs that someone must pay for. When you encounter a service claiming to offer completely free, unlimited GPT-5.2 API access, the critical question becomes: who is actually paying, and what are they getting in return?

OpenAI operates GPT-5.2 on a pay-per-use model because running inference on models of this scale requires substantial investment. The company spent billions developing GPT-5 and continues to invest heavily in infrastructure. Even with massive scale efficiencies, the marginal cost of each API call is non-zero. This economic reality means that any service offering "free unlimited" access is either operating at a loss (unsustainable), has hidden revenue streams (often concerning), or is misrepresenting what they actually provide.

Services that appear free typically monetize in one of several ways: advertising injection into responses, data collection and resale, using stolen or shared API keys, or converting free users into paying customers through aggressive upselling. Some legitimate services like Puter.js use a "user-pays" model where the developer does not pay, but end users cover their own costs. Understanding these models helps distinguish genuine opportunities from potential security risks.

The search term "不限速" (unlimited speed/no rate limiting) specifically addresses rate limits, which exist to prevent abuse and ensure fair resource allocation. Even paying customers face rate limits proportional to their tier. Completely removing rate limits would allow a single user to monopolize resources, degrading service for everyone else. This is why truly unlimited access is neither offered officially nor sustainably available through any legitimate third-party service.

Official GPT-5.2 Rate Limits and Pricing Explained

Understanding OpenAI's official pricing structure provides the baseline against which all alternatives should be measured. The GPT-5.2 API, released in late 2025, represents the frontier of AI capability with corresponding frontier pricing. However, the pricing model includes several optimization opportunities that many developers overlook.

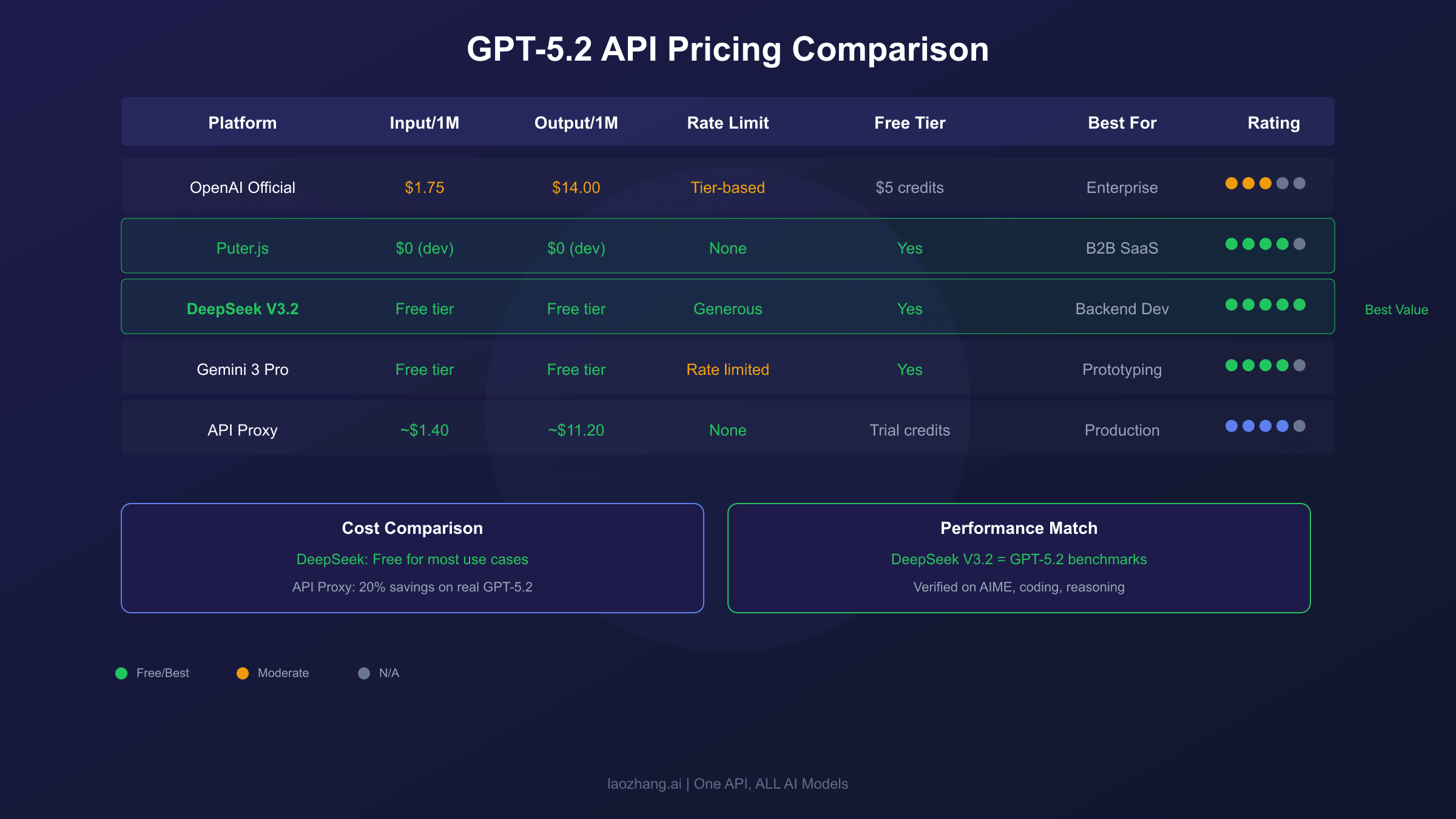

The core pricing for GPT-5.2 is $1.75 per million input tokens and $14.00 per million output tokens. For comparison, GPT-5 costs $1.25 and $10.00 respectively, while GPT-5 Mini offers dramatic savings at $0.25 and $2.00 per million tokens. The Nano variant provides the most economical option at $0.05 input and $0.40 output per million tokens. These tiered options allow developers to match model capability to task requirements, potentially reducing costs by 90% or more for simpler tasks.

| Model | Input (per 1M tokens) | Output (per 1M tokens) | Context Window |
|---|---|---|---|
| GPT-5.2 | $1.75 | $14.00 | 400K |
| GPT-5 | $1.25 | $10.00 | 400K |
| GPT-5 Mini | $0.25 | $2.00 | - |
| GPT-5 Nano | $0.05 | $0.40 | - |
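To see what these prices mean per request, the sketch below converts the table into a per-request cost. The prices are copied from the table and may change; the dictionary keys and the hypothetical workload of 1,000 input and 500 output tokens are illustrative assumptions. With those numbers, one GPT-5.2 request costs about $0.00875 and one GPT-5 Nano request about $0.00025, a 35x difference.

```python
# Per-million-token prices (USD) copied from the pricing table above.
# These figures may change; check OpenAI's pricing page before relying on them.
PRICES = {
    "gpt-5.2": (1.75, 14.00),
    "gpt-5": (1.25, 10.00),
    "gpt-5-mini": (0.25, 2.00),
    "gpt-5-nano": (0.05, 0.40),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one uncached, real-time request."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 1,000 input and 500 output tokens per request.
    for name in PRICES:
        print(f"{name:12s} ${request_cost(name, 1_000, 500):.6f}")
```

Multiplying the per-request figure by your expected monthly request count gives a quick budget estimate before you commit to a model.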

Rate limits vary significantly by account tier. Free tier API accounts (if available) face strict limits of approximately 3 requests per minute. Paid accounts in Tier 1 start with 500 requests per minute and 30,000 tokens per minute for GPT-5.2. Higher tiers progressively increase these limits, with enterprise accounts reaching practically unlimited throughput for most applications. For a detailed breakdown of ChatGPT subscription tiers and their rate limits, see our ChatGPT Plus rate limit guide.
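Staying under your tier's request limit on the client side avoids many rate-limit errors in the first place. The following is a minimal sliding-window limiter, assuming you set `max_requests` yourself to match your tier (for example 500 for the Tier 1 figure above). The class name is hypothetical, and the sketch tracks requests per minute only, not tokens per minute.

```python
import time
from collections import deque


class RequestsPerMinuteLimiter:
    """Client-side sliding-window limiter that keeps requests under a per-window cap.

    max_requests is an assumption you configure to match your account tier;
    this class does not query OpenAI for your actual limits.
    """

    def __init__(self, max_requests: int, window_seconds: float = 60.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.max_requests = max_requests
        self.window = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without waiting
        self.sleep = sleep
        self.timestamps = deque()  # send times of requests inside the current window

    def acquire(self) -> None:
        """Block until another request fits in the window, then record it."""
        while True:
            now = self.clock()
            # Drop timestamps that have aged out of the window.
            while self.timestamps and now - self.timestamps[0] >= self.window:
                self.timestamps.popleft()
            if len(self.timestamps) < self.max_requests:
                self.timestamps.append(now)
                return
            # Wait until the oldest recorded request leaves the window.
            self.sleep(self.window - (now - self.timestamps[0]))
```

Call `limiter.acquire()` immediately before each API request; a single limiter instance should be shared by every code path that uses the same API key.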

Two often-overlooked cost optimizations deserve attention. First, cached input tokens receive a 90% discount, making repeated prompts with identical prefixes dramatically cheaper. Second, the Batch API processes requests asynchronously within 24 hours at 50% off both input and output costs. For non-time-sensitive workloads like content generation or batch analysis, this represents substantial savings. If your application can tolerate latency, combining cached tokens with batch processing can cut input-token costs by up to 95% compared to real-time, non-cached requests (0.10 × 0.50 = 0.05 of the list price). Output tokens receive only the 50% batch discount, so the overall saving depends on your ratio of input to output tokens.
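The arithmetic behind these discounts is easy to get wrong, because caching affects only input tokens. The sketch below assumes the discounts stated above (cached input at 10% of the input price, batch at 50% of both prices) and assumes, as the paragraph does, that the two stack; confirm that against your own billing. The function name and default GPT-5.2 prices are illustrative.

```python
def discounted_cost(input_tokens, output_tokens, input_price=1.75, output_price=14.00,
                    cached_fraction=0.0, batch=False):
    """Estimate USD cost with cached-input and Batch API discounts.

    Prices are per 1M tokens. Assumes cached input costs 10% of the normal
    input price and batch requests cost 50% of both prices, applied together.
    """
    batch_factor = 0.5 if batch else 1.0
    cached = input_tokens * cached_fraction  # tokens served from the prompt cache
    uncached = input_tokens - cached
    input_cost = (uncached + cached * 0.10) * input_price * batch_factor
    # Caching never applies to output tokens; only the batch discount does.
    output_cost = output_tokens * output_price * batch_factor
    return (input_cost + output_cost) / 1_000_000
```

For 1M fully cached, batched input tokens this gives $0.0875 instead of $1.75 (a 95% saving), while 1M batched output tokens still cost $7.00 instead of $14.00 (a 50% saving).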

5 Legitimate Ways to Access GPT-5.2 Affordably

Rather than chasing mythical free unlimited access, focusing on legitimate pathways yields sustainable results. Each approach has trade-offs between cost, convenience, and capability that suit different use cases.

1. Puter.js User-Pays Model

Puter.js offers a unique approach where developers access GPT-5.2 (and other models including GPT-5.1, o1, o3, o4, and DALL-E) without paying anything themselves. Instead, end users authenticate and cover their own usage costs. This works well for applications where users expect to pay for AI features or where you can pass costs through to customers. The integration requires only a single script tag with no API key management. For developers building AI-powered tools for paying customers, this eliminates the need to manage API billing entirely.

2. DeepSeek V3.2 Free Tier

DeepSeek offers GPT-5.2-level reasoning performance at no cost through their free tier. Independent benchmarks show DeepSeek-V3.2 matching GPT-5.2 on mathematical reasoning (AIME), coding tasks, and general knowledge. DeepSeek's API is OpenAI-compatible, so most applications can switch by changing only the base URL, API key, and model name. The trade-off is that DeepSeek, being a Chinese company, may not suit applications with strict data residency requirements.

3. Google Gemini 3 Pro Free Tier

Google AI Studio provides free access to Gemini 3 Pro, which matches or exceeds GPT-5.2 on many benchmarks. The free tier includes generous rate limits sufficient for development and prototyping. For production applications, the paid tier offers competitive pricing. Google's infrastructure ensures high reliability, and their API follows modern conventions that simplify integration.

4. API Proxy Services

Third-party API proxy services aggregate demand across many users, negotiating volume discounts and passing savings to customers. These services typically offer 10-30% discounts compared to official pricing, with the added benefit of no geographic restrictions and support for local payment methods. For developers who need the actual GPT-5.2 model (not alternatives) but want cost optimization, platforms like laozhang.ai provide OpenAI-compatible APIs at reduced prices. The key is verifying the legitimacy of the proxy service—reputable providers are transparent about their business model and have established track records.

5. OpenAI Free Credits

New OpenAI accounts may receive $5 in free credits, though this policy has been inconsistent. These credits work for any model including GPT-5.2 and remain valid for three months. While insufficient for production use, they enable thorough evaluation and prototyping. Additionally, OpenAI's Researcher Access Program provides up to $1,000 in credits for academic projects, and the Data Sharing Program offers substantial free usage in exchange for allowing OpenAI to use prompts for model improvement.

Free Tier Comparison: OpenAI vs Alternatives

A direct comparison reveals how different free options stack up against each other and against OpenAI's paid API. This comparison focuses on practical considerations: actual capability, ease of integration, and sustainability for ongoing projects.

| Platform | Free Allowance | Model Quality | Integration Complexity | Sustainability |
|---|---|---|---|---|
| ChatGPT Free | 10 GPT-5.2 messages / 5 hours | Excellent | Web only | Long-term |
| OpenAI API Credits | $5 one-time | Excellent | Standard SDK | One-time |
| Puter.js | Unlimited (user-pays) | Excellent | Very easy | Sustainable |
| DeepSeek | Generous free tier | Near-GPT-5.2 | Minor changes | Sustainable |
| Gemini 3 Pro | Rate-limited free tier | Competitive | Google SDK | Sustainable |

For developers building applications, Puter.js and DeepSeek represent the most sustainable free options. Puter.js excels when you can pass costs to users, while DeepSeek works best when you need free access for your own backend processing. Gemini 3 Pro offers a middle ground with established Google infrastructure but requires learning a different API.

The ChatGPT web interface's free tier suits individuals exploring AI capabilities but is impractical for programmatic access. OpenAI's initial credits work well for evaluation but do not support ongoing development. Understanding these differences helps match the right solution to your specific needs.

For ongoing development without per-call costs, DeepSeek's free tier currently offers the most practical option for backend processing. Its reasoning capabilities genuinely match GPT-5.2 on technical tasks, though creative writing and nuanced conversations may show differences. Testing with your specific use case remains essential before committing to any platform.

Security Risks of Unofficial Free API Services

The appeal of free, unlimited API access creates opportunities for malicious actors. Understanding common risks helps you evaluate offers critically and protect both your applications and users.

Data Logging and Resale

Unofficial services may log every prompt and response, creating datasets they sell to third parties or use for their own model training. Your proprietary prompts, user data, and business logic could end up in competitor products or public datasets. Legitimate services clearly state their data handling policies; absence of such policies is a red flag.

Stolen or Shared API Keys

Some "free" services operate using stolen API keys or keys shared from compromised accounts. This creates multiple risks: the service can disappear instantly when keys are revoked, your usage patterns are linked to potentially fraudulent activity, and you may face legal liability for using stolen credentials.

Response Manipulation

Free services may inject advertising, modify responses to include promotional content, or filter certain outputs. This compromises the integrity of AI-generated content and may violate your application's requirements or user expectations.

Red Flags to Watch For

Several warning signs indicate potentially problematic services. Anonymous operators with no verifiable identity or company registration should raise immediate concerns. Absence of terms of service, privacy policy, or clear business model explanation suggests the service operates outside normal accountability structures. Promises that seem too good to be true—like truly unlimited free access—almost always have hidden catches.

Reputable third-party services clearly explain how they operate profitably. They have visible leadership, customer support channels, and established payment processing relationships that provide accountability. If a service cannot explain how they make money while offering you free service, assume you or your data is the product being sold.

Maximizing Your Free API Credits

If you secure free credits from OpenAI or other providers, strategic usage can extend their value significantly. These optimization techniques apply whether you have $5 in credits or $1,000.

Intelligent Model Selection

Not every task requires GPT-5.2. Simple classification, extraction, or formatting tasks work excellently with GPT-5 Nano at 1/35th the cost. Reserve GPT-5.2 for complex reasoning, creative tasks, and situations where maximum capability genuinely matters. A routing layer that automatically selects the appropriate model based on task complexity can reduce costs by 50-80% without noticeably impacting quality.
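A routing layer can start as a simple rule before you invest in anything smarter. The sketch below uses hypothetical task labels and an arbitrary 4,000-character threshold; a production router might instead use a classifier, or a cheap model, to judge task complexity.

```python
# Hypothetical routing rule: the task labels and the length threshold are
# illustrative assumptions, not an OpenAI feature.
SIMPLE_TASKS = {"classification", "extraction", "formatting"}


def choose_model(task_type: str, prompt: str, long_prompt_chars: int = 4_000) -> str:
    """Pick the cheapest model likely to handle the task."""
    if task_type in SIMPLE_TASKS and len(prompt) < long_prompt_chars:
        return "gpt-5-nano"
    if task_type in SIMPLE_TASKS:
        return "gpt-5-mini"  # simple task, but a long input
    return "gpt-5.2"  # complex reasoning, creative work, or unknown task types
```

Defaulting unknown task types to GPT-5.2 keeps quality safe while you measure which categories can move to cheaper models.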

Response Caching

Implement caching for identical or similar queries. A simple cache checking for exact prompt matches can dramatically reduce API calls for applications with repetitive queries. More sophisticated semantic caching can identify similar questions and serve cached responses, further reducing costs while maintaining user experience.
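An exact-match cache can be as small as a dictionary keyed on a hash of the model name and messages. This is a minimal in-memory sketch: the class name is hypothetical, and `call` is a placeholder for whatever function actually sends the request (for example, one wrapping `client.chat.completions.create` and returning the message text). Semantic caching, which needs embeddings and a similarity threshold, is not shown.

```python
import hashlib
import json


class ExactMatchCache:
    """In-memory cache keyed on the exact model name and message list."""

    def __init__(self):
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model, messages):
        # sort_keys makes the key independent of dict key order.
        payload = json.dumps({"model": model, "messages": messages}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_call(self, model, messages, call):
        """Return a cached reply, or run call(model, messages) and cache its result."""
        key = self._key(model, messages)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        result = call(model, messages)
        self._store[key] = result
        return result
```

Entries never expire in this sketch, so for long-running services you would add a size limit or time-to-live, or move the store to something like Redis.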

Batch Processing

Whenever possible, use OpenAI's Batch API for 50% savings. This works well for content generation, document analysis, and any task that does not require real-time responses. Structure your application to queue requests during user interactions and process them in batches, returning results asynchronously.
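The Batch API takes a JSONL file in which each line describes one request with a `custom_id`, `method`, `url`, and `body`. The helper below is a sketch that builds such a file from plain prompts; the function name and the `request-N` IDs are assumptions for illustration.

```python
import json


def build_batch_jsonl(prompts, model="gpt-5.2"):
    """Turn a list of user prompts into Batch API input lines (one JSON object per line)."""
    lines = []
    for i, prompt in enumerate(prompts):
        request = {
            "custom_id": f"request-{i}",  # used to match results back to inputs
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": {
                "model": model,
                "messages": [{"role": "user", "content": prompt}],
            },
        }
        lines.append(json.dumps(request))
    return "\n".join(lines) + "\n"
```

After writing the result to a file, you would upload it with `client.files.create(file=..., purpose="batch")`, start the job with `client.batches.create(input_file_id=..., endpoint="/v1/chat/completions", completion_window="24h")`, and match each output line back to its prompt by `custom_id`, since results are not guaranteed to arrive in input order.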

Prompt Optimization

Shorter prompts cost less. Refine your system prompts to eliminate unnecessary instructions while maintaining output quality. Use few-shot examples strategically—sometimes they help, but often a well-crafted zero-shot prompt achieves similar results at lower cost. Test extensively to find the minimal effective prompt for each use case.

Leverage Cached Input Tokens

When multiple requests share common prefixes (like system prompts), the 90% discount on cached tokens provides substantial savings. Structure your prompts so the unchanging portions come first, maximizing cache hit rates. For applications with standardized system prompts, this optimization alone can reduce costs by 40-60%.
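In practice this means building every request from the same static pieces first and appending per-request content last. The sketch below assumes a hypothetical support-assistant prompt and few-shot example. At the time of writing, OpenAI documents that prompt caching applies automatically only to prompts of 1,024 tokens or more, so a prompt this short would not actually be cached; the point is the ordering.

```python
# Hypothetical static content shared by every request.
STATIC_SYSTEM_PROMPT = (
    "You are a support assistant for a hypothetical product. "
    "Answer concisely and cite the relevant help-center section."
)
STATIC_EXAMPLES = [
    {"role": "user", "content": "How do I reset my password?"},
    {"role": "assistant", "content": "Use Settings > Security > Reset password."},
]


def build_messages(user_question, retrieved_context):
    """Place unchanging content first so consecutive requests share a prefix."""
    return (
        [{"role": "system", "content": STATIC_SYSTEM_PROMPT}]
        + STATIC_EXAMPLES
        # Per-request content goes last; putting it first would break the shared prefix.
        + [{"role": "user",
            "content": f"Context:\n{retrieved_context}\n\nQuestion: {user_question}"}]
    )
```

Anything that varies per request, including timestamps or user IDs inside the system prompt, should also move to the end, since a single changed token early in the prompt ends the cacheable prefix.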

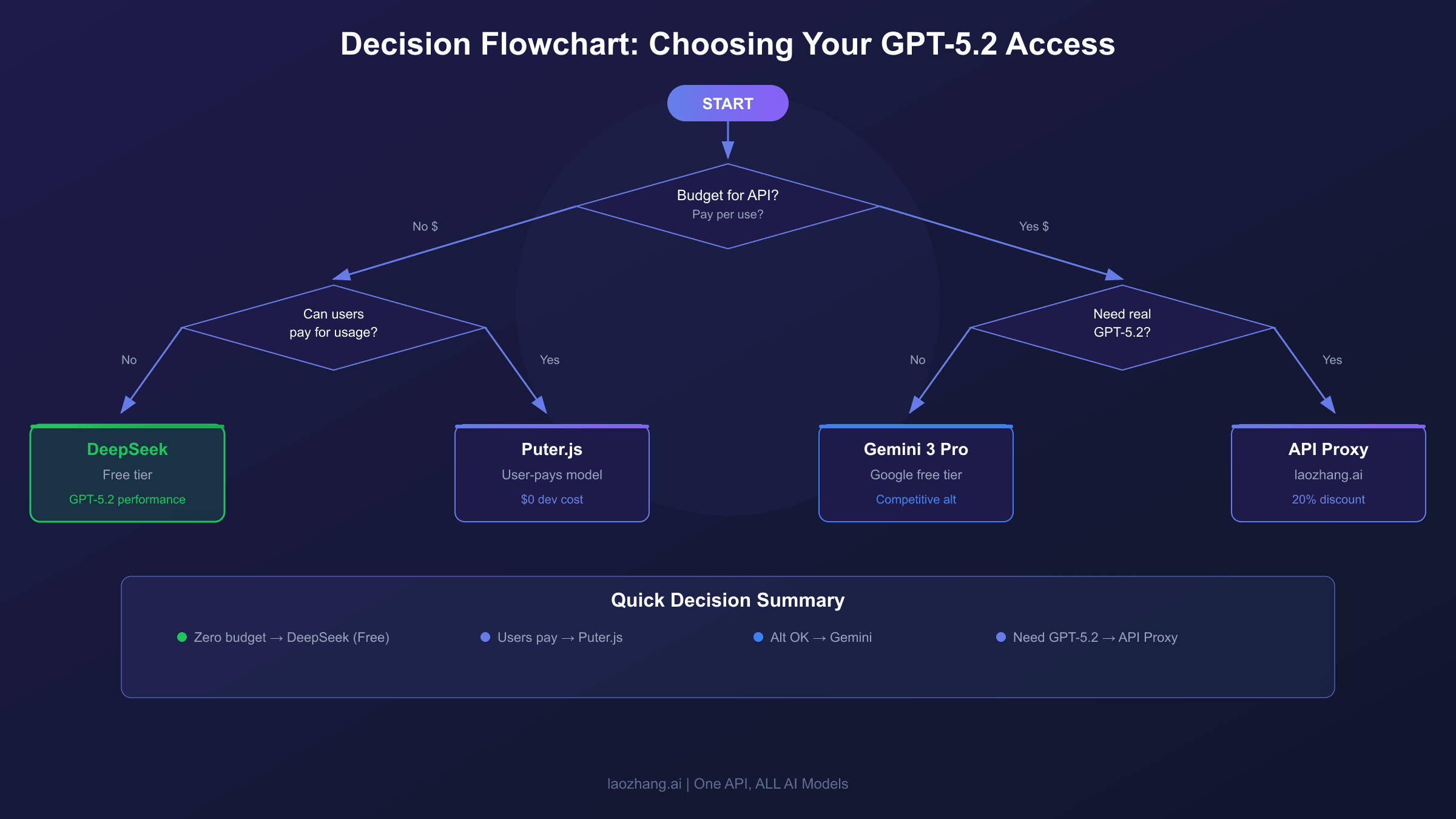

Decision Guide: Free vs Paid API Access

Choosing between free alternatives and paid official API depends on your specific situation. This framework helps clarify which approach suits different scenarios.

Choose Free Alternatives (DeepSeek, Gemini) When:

- You are building a prototype or MVP and need to minimize initial costs

- Your application does not require specifically GPT-5.2 (alternatives offer comparable capability)

- You can tolerate minor API differences and potential service variations

- Data residency in US-based infrastructure is not a requirement

- You are exploring ideas before committing to a technology stack

Choose Puter.js User-Pays Model When:

- Your users expect to pay for AI features

- You want to avoid API billing complexity entirely

- Your application runs in browser environments

- You need access to multiple OpenAI models flexibly

- Revenue model supports passing AI costs to customers

Choose API Proxy Services When:

- You specifically need GPT-5.2 (not alternatives) but want cost optimization

- Geographic restrictions or payment method limitations affect official API access

- You need a reliable OpenAI-compatible endpoint with cost savings

- Your volume justifies investigating discounted pricing

For developers needing reliable, cost-effective GPT-5.2 API access, laozhang.ai provides an OpenAI-compatible interface with competitive pricing. The platform supports Alipay and other local payment methods, making it accessible regardless of geographic location.

Choose Official OpenAI API When:

- You need maximum reliability and direct vendor support

- Compliance requirements mandate direct relationship with model provider

- Your budget accommodates official pricing

- You require guaranteed access to latest models immediately upon release

- Enterprise features like custom model fine-tuning are needed

Quick Start: GPT-5.2 API Integration Code

Practical code examples for each major access method let you start building immediately. Treat them as minimal starting points: add error handling, retries, and secure key storage before using them in production.

Official OpenAI API (Python)

```python
from openai import OpenAI

client = OpenAI(api_key="sk-your-api-key")

response = client.chat.completions.create(
    model="gpt-5.2",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Explain quantum computing in simple terms."},
    ],
)

print(response.choices[0].message.content)
```

Puter.js (Browser JavaScript)

```html
<script src="https://js.puter.com/v2/"></script>
<script>
  puter.ai.chat("Explain quantum computing in simple terms", {
    model: "gpt-5.2"
  })
    .then(response => console.log(response));
</script>
```

API Proxy Service (Python)

```python
from openai import OpenAI

# Using laozhang.ai or similar proxy service
client = OpenAI(
    api_key="sk-your-proxy-api-key",
    base_url="https://api.laozhang.ai/v1",
)

response = client.chat.completions.create(
    model="gpt-5.2",
    messages=[{"role": "user", "content": "Explain quantum computing."}],
)

print(response.choices[0].message.content)
```

DeepSeek Alternative (Python)

```python
from openai import OpenAI

client = OpenAI(
    api_key="sk-your-deepseek-key",
    base_url="https://api.deepseek.com/v1",
)

response = client.chat.completions.create(
    model="deepseek-chat",  # DeepSeek V3.2
    messages=[{"role": "user", "content": "Explain quantum computing."}],
)

print(response.choices[0].message.content)
```

Notice how similar these implementations are. The OpenAI SDK's flexibility means switching between official API, proxy services, and some alternatives requires only changing the base_url and api_key parameters. This makes it easy to start with one option and migrate to another as needs evolve.
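Because only `base_url`, `api_key`, and the model name change, the switch can live in configuration rather than code. This sketch reuses the base URLs from the examples above and assumes hypothetical environment-variable names (`PROXY_API_KEY`, `DEEPSEEK_API_KEY`; `OPENAI_API_KEY` is the SDK's usual default). It returns keyword arguments for `OpenAI(...)` instead of creating a client, so it runs without network access.

```python
import os

# Base URLs copied from the examples above; env-var names are assumptions.
PROVIDERS = {
    "openai": {"base_url": None, "key_env": "OPENAI_API_KEY", "model": "gpt-5.2"},
    "proxy": {"base_url": "https://api.laozhang.ai/v1", "key_env": "PROXY_API_KEY",
              "model": "gpt-5.2"},
    "deepseek": {"base_url": "https://api.deepseek.com/v1", "key_env": "DEEPSEEK_API_KEY",
                 "model": "deepseek-chat"},
}


def client_settings(provider, env=os.environ):
    """Return (client_kwargs, model) for openai.OpenAI(**client_kwargs)."""
    cfg = PROVIDERS[provider]
    # Raises KeyError if the key is missing, which fails fast instead of at request time.
    kwargs = {"api_key": env[cfg["key_env"]]}
    if cfg["base_url"]:
        kwargs["base_url"] = cfg["base_url"]
    return kwargs, cfg["model"]
```

You would then create the client with `OpenAI(**kwargs)` and pass `model` to `client.chat.completions.create`, choosing the provider from a single setting such as an environment variable.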

For more information about API integration patterns and troubleshooting common errors, see our guides on Gemini API rate limits and API verification requirements.

Summary and Final Recommendations

The search for "free GPT-5.2 API without rate limits" leads to a more nuanced reality: while OpenAI does not offer such access, legitimate pathways exist that can meet most developers' needs at minimal or zero cost.

For truly free access, DeepSeek V3.2 and Google Gemini 3 Pro provide GPT-5.2-competitive capabilities through generous free tiers. These alternatives handle most use cases excellently, though they are not identical to GPT-5.2 in every scenario.

For applications requiring actual GPT-5.2, the Puter.js user-pays model eliminates developer costs when users can cover their own usage. API proxy services offer discounted access to the real GPT-5.2 API when direct pricing is prohibitive.

Critical takeaways for developers evaluating options:

- Verify legitimacy: Any service claiming completely free unlimited access has a hidden business model worth understanding

- Match solution to need: Not every task requires frontier models; cost-effective alternatives often suffice

- Plan for scale: What works for prototyping may not suit production; factor in migration paths

- Prioritize security: Your prompts and user data deserve protection; choose services with clear policies

The ecosystem around AI APIs continues evolving rapidly. New alternatives emerge regularly, pricing adjusts, and capabilities improve. Staying informed about options while maintaining security awareness positions you to make optimal choices as the landscape develops. For those considering the paid ChatGPT route, our ChatGPT Plus vs Pro comparison provides detailed analysis for developer use cases.

Frequently Asked Questions

Is there really free unlimited GPT-5.2 API access available?

No, OpenAI does not offer free unlimited API access. Services claiming to provide this are either using alternative models, operating unsustainably, or monetizing through concerning means like data collection. Legitimate free options include free tiers with rate limits (like ChatGPT's 10 messages per 5 hours) or alternative models like DeepSeek that match GPT-5.2 capability.

Can I use Puter.js for commercial projects?

Yes, Puter.js is designed for commercial use. The key consideration is that end users cover their own API costs through their authentication. This works well for B2B SaaS applications or consumer products where users expect to pay for AI features. Review their terms of service for your specific use case.

What is the cheapest way to use GPT-5.2 specifically?

Combining cached input tokens (90% discount) with batch processing (50% discount) can cut GPT-5.2 input-token costs by up to 95% for suitable workloads, while output tokens receive only the 50% batch discount. An API proxy service (typically 10-30% below official pricing) can lower the base price further. For real-time applications, using GPT-5 Mini or Nano for simple tasks and reserving GPT-5.2 for complex tasks provides the best balance.

Are API proxy services like laozhang.ai safe to use?

Reputable proxy services with established track records, clear business models, and transparent operations are generally safe. They aggregate demand to negotiate volume discounts and pass savings to users. Verify legitimacy by checking for: clear company information, stated data handling policies, customer reviews, and sustainable business model explanation.

How do rate limits work on the free tier?

ChatGPT's free tier allows 10 GPT-5.2 messages every 5 hours. After reaching this limit, conversations automatically switch to a lighter model until your limit resets. API rate limits for free credits (if available) are typically very restrictive—around 3 requests per minute—making them suitable only for testing and evaluation.

What happens when I hit my rate limit?

On ChatGPT, you are downgraded to a mini model rather than blocked entirely. On the API, you receive an HTTP 429 (Too Many Requests) error and must wait before retrying. Implement exponential backoff in your code to handle rate limits gracefully. For production applications, consider higher-tier accounts or distributing load across multiple API keys within OpenAI's terms of service.
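Exponential backoff means waiting longer after each consecutive failure. Below is a generic sketch, assuming you pass in the exception types to retry on; the function name and default delays are illustrative choices, and random "full jitter" is used so many clients do not retry in lockstep.

```python
import random
import time


def call_with_backoff(fn, retry_on=(Exception,), max_retries=5,
                      base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call fn(); on a retryable error, wait up to base_delay * 2**attempt and retry."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except retry_on:
            if attempt == max_retries:
                raise  # out of retries: surface the last error to the caller
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Full jitter: sleep a random time between 0 and the capped delay.
            sleep(random.uniform(0, delay))
```

With the OpenAI Python SDK you would pass `retry_on=(openai.RateLimitError,)` and wrap the call in a lambda. Note that the SDK already retries some errors itself (configurable through the client's `max_retries` option), so you may want to lower that setting when adding your own backoff layer.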