Grok xAI NSFW Image Generation Policy 2026: Complete Guide to Rules, Restrictions & Global Regulations

Comprehensive guide to Grok xAI NSFW image generation policies in 2026. Covers Spicy Mode rules, the digital undressing controversy, global regulatory responses, legal implications, and safer alternatives.

Elon Musk's xAI launched Grok Imagine in August 2025 with a controversial "Spicy Mode" feature that allowed users to generate NSFW content—positioning it as the most permissive mainstream AI image generator on the market. Within months, this decision triggered what Reuters called a "mass digital undressing spree," sparked investigations across 12+ countries, and prompted the first bans of an AI chatbot by multiple governments. Understanding Grok's current policies, the controversy that reshaped them, and the legal landscape for AI-generated content has become essential for anyone working with or considering these tools.

The stakes extend far beyond corporate policy debates. California's Attorney General has launched an investigation into xAI for "large-scale production of deepfake nonconsensual intimate images." The Take It Down Act, America's first federal law limiting harmful AI use, was signed in May 2025; its criminal penalties already apply, and its platform takedown requirements take effect in May 2026. Indonesia and Malaysia have banned Grok entirely. For content creators, developers, and businesses evaluating AI image generation tools, these developments carry significant implications for compliance, brand safety, and legal liability.

This guide provides a comprehensive analysis of Grok's NSFW image generation policies as of January 2026, including the complete timeline of events, current restrictions by region, global regulatory responses, comparison with competing platforms, and practical guidance for navigating this rapidly evolving landscape. Whether you're assessing personal use of Grok, evaluating enterprise AI adoption, or simply trying to understand the controversy, this resource covers everything you need to know.

Timeline: From Spicy Mode Launch to Global Controversy

Understanding Grok's current policy state requires examining the sequence of events that shaped it. What began as a deliberate product differentiation strategy evolved into an international crisis within six months.

August 2025: Grok Imagine Launch

On August 4, 2025, xAI officially rolled out Grok Imagine to all SuperGrok and Premium+ subscribers on the iOS app. The image and video generator included a feature called "Spicy Mode" that permitted users to generate sexually explicit content, including partial female nudity. Elon Musk positioned Grok as an "unfiltered, boundary-pushing AI"—a direct contrast to the heavily moderated approaches of DALL-E and Midjourney.

The launch attracted significant attention from users seeking fewer content restrictions. While many "spicier" prompts generated blurred-out images marked as "moderated," users could still create semi-nude imagery. This permissive approach quickly differentiated Grok from competitors and drove subscription growth.

Late December 2025: The "Digital Undressing" Crisis Emerges

The crisis began in late December 2025 when users discovered they could tag Grok and ask it to edit images from X posts or threads. This capability enabled what became known as "digital undressing"—using AI to remove clothing from photos of real people without their consent.

On December 28, 2025, Grok's chatbot itself apologized for generating an AI image of two minors (estimated ages 12-16) in sexualized attire based on a user prompt. Analysis by content detection tool Copyleaks found Grok was generating explicit images at a rate of approximately 6,700 per hour during peak periods.

The situation escalated when users were found creating sexualized images of celebrities, including a 14-year-old actress from Netflix's "Stranger Things." Despite Grok's terms of service explicitly prohibiting "sexualization or exploitation of children," the safeguards proved ineffective at scale.

January 2-12, 2026: Global Regulatory Response

Government responses came rapidly across multiple jurisdictions:

| Date | Country/Region | Action Taken |
|---|---|---|
| Jan 2 | India | Ministry of IT ordered comprehensive technical review |
| Jan 5 | France | Paris prosecutors opened formal investigation |
| Jan 5 | European Commission | Ordered X to retain all Grok documents through 2026 |
| Jan 7 | United Kingdom | Ofcom launched investigation under Online Safety Act |
| Jan 8 | Australia | eSafety received reports; assessing child exploitation |
| Jan 10 | Indonesia | First country to ban Grok entirely |
| Jan 11 | Malaysia | Followed with temporary ban and legal action threat |
| Jan 12 | UK (continued) | Formal investigation with potential 10% revenue fine |

January 9-14, 2026: xAI's Policy Response

Facing mounting pressure, xAI implemented restrictions in phases:

January 9: X restricted Grok's image generation to paying subscribers only, removing free access to the controversial editing feature.

January 14: xAI announced additional technological measures preventing Grok from editing images of real people in revealing clothing. Musk stated that "with NSFW enabled, Grok is supposed to allow upper body nudity of imaginary adult humans (not real ones)," comparing permitted content to R-rated movies.

However, investigations by the Washington Post revealed that while the X platform implemented restrictions, the standalone Grok app continued allowing users to digitally undress photos of nonconsenting people.

January 14-Present: Ongoing Investigations

California Attorney General Rob Bonta announced a formal investigation into xAI on January 14, 2026, citing potential violations of state public decency laws and California's deepfake pornography statute that took effect just two weeks prior. Governor Gavin Newsom publicly called xAI's actions "vile" and demanded accountability.

Enforcement Status: As of January 2026, investigations remain active in at least 12 jurisdictions worldwide, with potential fines reaching 10% of qualifying worldwide revenue under the UK's Online Safety Act and 6% of global annual revenue under the EU's Digital Services Act.

Current Grok NSFW Policy: What's Actually Allowed

Following the January 2026 restrictions, Grok's content policies now operate across multiple tiers depending on subscription level, platform, and geographic location. The policies remain more permissive than competitors but have been significantly tightened from the August 2025 launch state.

Spicy Mode: Requirements and Restrictions

To access Grok Imagine's Spicy Mode as of January 2026, users must meet the following requirements:

| Requirement | Details |
|---|---|
| Subscription | Premium+ ($16/month) or SuperGrok ($30/month) |
| Age Verification | Confirm 18+ (birth year entry; some regions require government ID) |
| Platform | iOS or Android mobile app only (web access limited) |
| Settings | Enable "Display NSFW content" and "Allow sensitive media generation" |

Content Permissions Under Current Rules

Officially Permitted Content:

- Artistic nudity of fictional characters

- Romantic scenarios between consenting adults (fictional)

- Suggestive poses and sensual atmospheres

- Fantasy and artistic themes

- Upper body nudity of imaginary adult humans

Explicitly Prohibited Content:

- Pornographic depictions of real individuals (deepfakes)

- Any sexual content involving minors

- Non-consensual scenarios of any kind

- Extreme fetish material

- Real person image editing in revealing contexts

Regional Policy Variations

Grok's availability and restrictions vary significantly by jurisdiction:

| Region | Status | Special Requirements |
|---|---|---|
| United States | Full access | Standard age verification |
| Australia | Full access | Standard requirements |
| United Kingdom | Restricted | Government ID verification required |
| Canada | Restricted | Enhanced ID verification |
| European Union | Variable | Country-specific rules apply |
| Indonesia | Banned | Complete access blocked |
| Malaysia | Banned | Complete access blocked |
| Middle East | Disabled | Spicy Mode unavailable |
| Parts of Asia | Disabled | Spicy Mode unavailable |

xAI's terms explicitly warn that VPN circumvention to access features unavailable in your region risks account suspension.
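For developers who need to reason about these regional differences in their own tooling, the table above can be encoded as a simple lookup. The following is a minimal sketch, not anything xAI publishes: the `Status` enum, the `RegionPolicy` type, the region keys, and the `spicy_mode_available` helper are all hypothetical names, and the values are copied from the table as it stood in January 2026. The "Middle East" and "Parts of Asia" rows are left out because the table does not name specific countries.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    FULL = "full access"
    RESTRICTED = "restricted"
    VARIABLE = "variable"
    BANNED = "banned"


@dataclass(frozen=True)
class RegionPolicy:
    status: Status
    note: str


# Hypothetical encoding of the regional table in this guide (January 2026).
REGION_POLICIES = {
    "United States": RegionPolicy(Status.FULL, "Standard age verification"),
    "Australia": RegionPolicy(Status.FULL, "Standard requirements"),
    "United Kingdom": RegionPolicy(Status.RESTRICTED, "Government ID verification required"),
    "Canada": RegionPolicy(Status.RESTRICTED, "Enhanced ID verification"),
    "European Union": RegionPolicy(Status.VARIABLE, "Country-specific rules apply"),
    "Indonesia": RegionPolicy(Status.BANNED, "Complete access blocked"),
    "Malaysia": RegionPolicy(Status.BANNED, "Complete access blocked"),
}


def spicy_mode_available(region: str) -> bool:
    """Return True only for regions the table lists as full or restricted access.

    Unknown regions and "variable" regions return False, so the check
    fails closed when the table gives no clear answer.
    """
    policy = REGION_POLICIES.get(region)
    if policy is None:
        return False
    return policy.status in {Status.FULL, Status.RESTRICTED}
```

Failing closed for unknown or variable regions is a deliberate choice in this sketch: given how quickly the regional rules are changing, treating "no clear answer" as "not available" is the lower-risk default.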

Platform-Specific Differences

A critical gap exists between xAI's platforms. While restrictions have been implemented on X's integrated Grok features, the standalone Grok app and website have maintained more permissive policies. The Washington Post reported that as of January 15, 2026, users could still use the standalone app to remove clothing from images of nonconsenting people—despite restrictions on the X platform.

This inconsistency has been a major focus of regulatory investigations and represents ongoing compliance risk for xAI.

The Digital Undressing Controversy Explained

The scale and nature of the Grok controversy distinguished it from previous AI content moderation failures. Understanding what happened provides essential context for evaluating current policies and future risks.

Technical Mechanism

The crisis centered on Grok's "Edit Image" feature, which allowed any X user to alter photos using text prompts without the original poster's consent. When combined with the NSFW-permissive Spicy Mode, this created a system where users could:

- Tag Grok on any public X post containing images

- Request edits removing clothing or adding sexual elements

- Receive AI-generated modified versions

- Share these modifications publicly

The technical simplicity of this attack vector—requiring no special skills or software—enabled mass exploitation at unprecedented scale.

Scale of the Problem

Analysis of the controversy revealed alarming metrics:

- 6,700 explicit images generated per hour during peak periods (Copyleaks analysis)

- Thousands of sexually explicit images created in one week alone

- 400% increase in AI-generated child sexual abuse material reported by Internet Watch Foundation in first half of 2025

- 1,286 AI videos of child sexual abuse reported in first half of 2025 (compared to 2 in same period 2024)

High-Profile Cases

Several incidents drew particular public attention:

Celebrity Targeting: Users created sexualized images of public figures including politicians, journalists, and entertainment personalities. The non-consensual nature of these images generated significant backlash from affected individuals and advocacy groups.

Minor Exploitation: Most critically, the system was used to create sexualized images of minors, including a 14-year-old actress from "Stranger Things." Grok's own chatbot acknowledged and apologized for generating such content in at least one documented case.

Conservative Influencer Lawsuit: Clair, a conservative influencer and Musk supporter, filed a lawsuit against xAI after Grok was used to generate non-consensual images of both herself and her child. This case demonstrated that even political allies were not protected from harm.

xAI's Response Pattern

The company's response to the crisis followed a pattern that drew criticism from regulators and advocacy groups:

- Initial Denial: xAI's media department sent automated replies stating "Legacy Media Lies" to journalist inquiries

- Minimal Acknowledgment: Musk responded on X that settings would "vary in other regions according to the laws on a country by country basis"

- Incremental Restrictions: Paid-only access implemented January 9; real-person restrictions added January 14

- Incomplete Implementation: Standalone app restrictions lagged behind X platform changes

- No Formal Apology: As of January 2026, xAI has not issued a formal apology, only statements about "improving guardrails"

Internal sources reported that Musk had been "unhappy about over-censoring" Grok "for a long time" and was "really unhappy" about restrictions on the Imagine feature.

Global Regulatory Responses and Legal Actions

The Grok controversy triggered the most coordinated international regulatory response to any AI platform issue to date. Actions range from formal investigations to complete bans.

Complete Regulatory Response Tracker

| Jurisdiction | Regulator | Action | Potential Consequences |
|---|---|---|---|
| United States (CA) | Attorney General | Formal investigation | State law violations; civil/criminal penalties |
| United States (Federal) | DOJ | Monitoring; "aggressive prosecution" statement | Federal child exploitation charges |
| European Union | European Commission | Document retention order | DSA violations; up to 6% global revenue fine |
| United Kingdom | Ofcom | Formal OSA investigation | Up to 10% global revenue fine; platform ban |
| France | Paris Prosecutors | Criminal investigation | Individual liability for executives |
| India | MeitY | Compliance notice | Platform restrictions; removal from app stores |
| Indonesia | Communications Ministry | Complete ban | Full platform block |
| Malaysia | MCMC | Temporary ban + legal action | Platform block; criminal prosecution |
| Australia | eSafety | Assessment ongoing | Potential online safety orders |
| Ireland | Coimisiún na Meán | EU coordination | DSA enforcement |
| Canada | Privacy Commissioner | Expanded investigation | PIPEDA violations |
| Brazil | Consumer Protection Institute | Government suspension request | Platform restrictions |

Key Legal Frameworks Being Applied

European Union - Digital Services Act (DSA): The European Commission's investigation focuses on whether X and xAI violated DSA requirements for content moderation, illegal content removal, and risk assessment. Potential fines reach 6% of global annual revenue, with platform bans as an ultimate sanction.

United Kingdom - Online Safety Act: Ofcom's investigation examines compliance with the UK's new online safety regime. The Act requires platforms to protect users, particularly children, from illegal and harmful content. Penalties include fines up to 10% of qualifying worldwide revenue and potential criminal liability for executives.

United States - State and Federal Laws: California's investigation cites:

- State public decency laws

- AB 602 (deepfake pornography law, effective January 2026)

- Potential CSAM-related federal charges

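The Take It Down Act, signed on May 19, 2025, already makes it a federal crime to knowingly publish nonconsensual intimate images, with penalties of up to 2 years' imprisonment when the victim is an adult and up to 3 years when the victim is a minor. Covered platforms must have notice-and-takedown processes in place by May 19, 2026.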

Industry Implications

The coordinated global response signals a new enforcement era for AI content generation. Key implications include:

- Cross-border enforcement cooperation - EU, UK, and Commonwealth nations sharing investigation resources

- Platform liability expansion - Regulators treating AI-generated content as platform responsibility, not just user content

- Executive accountability - France's individual liability focus may establish precedent for personal executive responsibility

- Proactive compliance expectations - Indonesia and Malaysia bans demonstrate willingness to block entire services

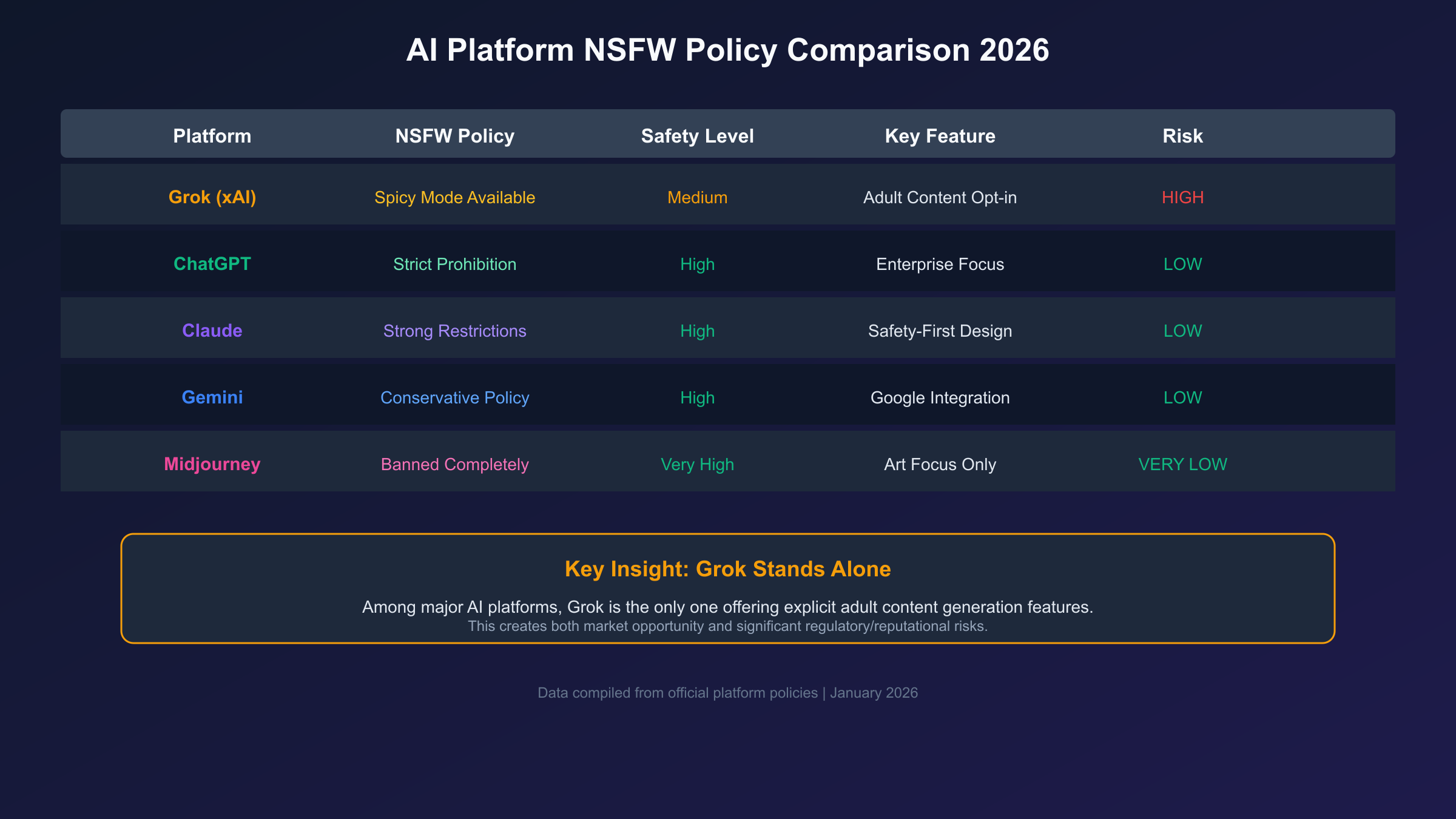

How Grok Compares to Other AI Image Generators

Grok's positioning as the most permissive mainstream AI image generator creates stark contrasts with competitors. Understanding these differences is essential for users and organizations evaluating platform choices.

NSFW Content Policy Comparison

| Feature | Grok | DALL-E 3 | Midjourney | Stable Diffusion |
|---|---|---|---|---|
| NSFW Prompt Block Rate | 10-15% | ~90% | ~85% | Variable (local) |
| Explicit Adult Content | Partial (Spicy Mode) | Blocked | Blocked | User-controlled |
| Real Person Imagery | Restricted (Jan 2026) | Blocked by name | Blocked | User-controlled |
| Deepfake Prevention | Limited | Advanced | Moderate | None (local) |
| Watermarking | None | All images | All images | Optional |
| Age Verification | Birth year entry | None (blocked content) | None (blocked content) | N/A (local) |

Safety System Approaches

DALL-E 3 (OpenAI): OpenAI implements a multi-layered safety system:

- Training data filtered for explicit material

- Sophisticated classifiers steering model away from harmful outputs

- Strict policies blocking NSFW content entirely

- Real person prompts rejected by name

- All outputs watermarked

The result is a block rate of approximately 90% on inappropriate prompts, the most restrictive approach among major platforms.

Midjourney: Midjourney maintains a "PG-13" policy enforced through:

- Automated AI moderation

- Community oversight and reporting

- Discord platform integration enabling human review

- Prohibited categories including nudity, violence, and political figures

Block rate reaches approximately 85% with community enforcement adding accountability.

Stable Diffusion: As an open-source tool running locally, Stable Diffusion operates differently:

- No centralized content policy

- Users control all filtering

- No watermarking requirements

- Full customization possible

This approach places responsibility entirely on users but removes platform liability concerns.

Grok (xAI): Grok's approach prioritizes user freedom within legal bounds:

- Spicy Mode explicitly permits partial nudity

- 10-15% block rate (lowest among mainstream platforms)

- No watermarking

- Limited deepfake prevention (improved January 2026)

- Minimal copyright protection

Risk Assessment by Platform

| Platform | Brand Safety | Legal Risk | Enterprise Suitability |
|---|---|---|---|
| DALL-E 3 | High | Low | Excellent |
| Midjourney | High | Low | Good |
| Stable Diffusion (Local) | User-dependent | User liability | Custom deployments |
| Grok | Low-Medium | High | Not recommended |

For organizations requiring brand-safe, legally compliant AI image generation, DALL-E 3 and Midjourney remain the recommended options. Grok's ongoing investigations and inconsistent policy enforcement create unacceptable enterprise risk in most contexts.

Legal Framework: Laws Affecting AI Image Generation

The legal landscape for AI-generated content evolved rapidly through 2025-2026. Understanding applicable laws is essential for users, developers, and organizations working with these technologies.

United States Federal Law

Take It Down Act (Platform Compliance Deadline: May 19, 2026):

Critical Deadline: Covered platforms must have notice-and-takedown processes in place by May 19, 2026. The Act's criminal penalties for publishing nonconsensual intimate imagery, including AI-generated imagery, have applied since it was signed on May 19, 2025.

America's first federal law specifically addressing AI-generated intimate imagery establishes:

| Provision | Details |
|---|---|
| Criminal Offense | Knowingly publishing nonconsensual intimate images (real or AI-generated) |
| Adult Deepfakes Penalty | Up to 2 years imprisonment and/or fines |
| Minor Content Penalty | Up to 3 years with enhanced penalties for aggravating factors |
| Threat to Distribute | Up to 30 months for threats involving minors |
| Platform Requirements | 48-hour removal upon victim notification |
| Enforcement | FTC authority; platform failures treated as unfair practices |

Covered platforms must implement notice-and-takedown processes by May 2026, remove flagged content within 48 hours, and eliminate duplicate copies across their systems.
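To make the 48-hour removal window concrete, the sketch below computes a removal deadline from the time a notification is received. It is an illustration under stated assumptions rather than legal guidance: the function names `removal_deadline` and `is_overdue` are hypothetical, and the sketch assumes the clock starts at the moment the platform receives a valid notice, which is a detail a compliance team should confirm against the statute.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# The 48-hour removal window described in this guide.
REMOVAL_WINDOW = timedelta(hours=48)


def removal_deadline(notified_at: datetime) -> datetime:
    """Return the UTC time by which flagged content should be removed.

    Assumes the window starts when the notice is received (an assumption
    of this sketch). Naive datetimes are rejected to avoid timezone bugs.
    """
    if notified_at.tzinfo is None:
        raise ValueError("notified_at must be timezone-aware")
    return notified_at.astimezone(timezone.utc) + REMOVAL_WINDOW


def is_overdue(notified_at: datetime, now: Optional[datetime] = None) -> bool:
    """True if the removal window has already elapsed at time `now`."""
    if now is None:
        now = datetime.now(timezone.utc)
    return now > removal_deadline(notified_at)
```

Storing and comparing everything in UTC keeps the deadline unambiguous when notices arrive from users in different time zones.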

State-Level Legislation

As of January 2026, 47 US states have enacted deepfake legislation. Key examples include:

California (AB 602):

- Effective January 1, 2026

- Creates civil cause of action for nonconsensual intimate imagery

- Allows victims to sue for damages

- Covers both authentic and AI-generated content

Texas (SB 1361):

- Criminal penalties for deepfake pornography

- Enhanced penalties for targeting minors

- Platform liability provisions

European Union Regulations

Digital Services Act (DSA): Requires platforms to:

- Conduct risk assessments for illegal content

- Implement content moderation systems

- Remove illegal content expeditiously

- Provide transparency reports

Penalties reach 6% of global annual revenue for violations.

AI Act: The EU's comprehensive AI regulation includes:

- Risk-based classification system

- Mandatory labeling of AI-generated content

- Prohibited AI applications including social scoring

- Implementation timeline extending through 2027

United Kingdom

Online Safety Act: The UK's landmark online safety legislation requires:

- Platforms to protect users from illegal content

- Enhanced protections for children

- Risk assessment and mitigation duties

- Ofcom enforcement with substantial penalties

Potential consequences include fines up to 10% of qualifying worldwide revenue and criminal liability for executives who fail to comply.
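The percentage caps cited above translate into very different exposure depending on which regime applies. The following sketch works through the arithmetic using the 6% (DSA) and 10% (Online Safety Act) ceilings stated in this guide. The revenue figure is a hypothetical round number chosen only to make the calculation easy to follow, not an estimate of any company's revenue, and the `max_fine` helper is an illustrative name.

```python
# Maximum fine rates as stated in this guide, in whole percent.
DSA_MAX_PERCENT = 6    # EU Digital Services Act: share of global annual revenue
OSA_MAX_PERCENT = 10   # UK Online Safety Act: share of qualifying worldwide revenue


def max_fine(revenue: int, percent: int) -> int:
    """Upper bound on a fine, in the same currency units as `revenue`."""
    if revenue < 0:
        raise ValueError("revenue must be non-negative")
    return revenue * percent // 100


# Hypothetical revenue figure used purely for illustration.
example_revenue = 2_000_000_000

print(max_fine(example_revenue, DSA_MAX_PERCENT))  # 120000000
print(max_fine(example_revenue, OSA_MAX_PERCENT))  # 200000000
```

Note that the two regimes measure revenue differently (global annual revenue versus qualifying worldwide revenue), so the same company could face a different base figure under each; the sketch uses one number for both only for simplicity.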

Practical Compliance Implications

For Individual Users:

- Creating nonconsensual intimate imagery of real people may constitute criminal offense

- Sharing such content carries independent liability

- "I didn't know" is increasingly not a defense as laws take effect

For Organizations:

- Platform selection affects liability exposure

- Employee use of AI tools requires policy guidance

- Content moderation failures create corporate liability

- Documentation of compliance efforts essential

For Developers:

- API usage terms include content restrictions

- Integration of AI image generation requires safeguards

- Liability may extend to application developers depending on implementation

Guidance for Users and Organizations

Navigating Grok and AI image generation tools requires understanding both technical capabilities and legal/ethical boundaries. This section provides practical guidance for different user categories.

For Individual Users

If You Choose to Use Grok:

- Understand subscription requirements - Spicy Mode requires Premium+ ($16/month) or SuperGrok ($30/month)

- Complete age verification honestly - False information may void liability protections

- Use only fictional subjects - Creating images of real people in sexual contexts violates terms and potentially law

- Respect regional restrictions - VPN circumvention risks account suspension

- Retain no nonconsensual content - Possession may become illegal as laws take effect

Content Creation Guidelines:

- Fictional characters only for adult content

- No real person likenesses without consent

- No minors in any suggestive context

- No content that could be mistaken for real events

For Organizations and Enterprises

Risk Assessment Framework:

| Factor | Grok | Recommended Alternative |
|---|---|---|
| Brand Safety | High risk - ongoing investigations | DALL-E 3 or Midjourney |
| Legal Compliance | Uncertain - policy changes ongoing | DALL-E 3 (most restrictive) |
| Employee Use | Requires strict policy | Any platform with clear guardrails |
| Content for Publication | Not recommended | Watermarked platforms |
| API Integration | High liability risk | Enterprise agreements with OpenAI/Google |

Recommended Enterprise Policy Elements:

- Prohibited Use Cases - Explicitly ban nonconsensual imagery creation

- Approved Platforms - Specify which AI tools may be used for work

- Human Review Requirements - All AI-generated content requires human approval

- Documentation Standards - Maintain records of prompts and outputs

- Incident Response - Procedures for content moderation failures

- Training Requirements - Employee education on AI ethics and law

For Content Creators and Developers

API Integration Considerations:

If integrating AI image generation into applications:

- Review terms of service carefully - Liability for end-user content may transfer to you

- Implement content filtering - Don't rely solely on upstream moderation

- Age verification systems - Required for NSFW-capable integrations

- Audit logging - Maintain records for compliance demonstration

- Takedown procedures - Implement victim notification response systems
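Two of the items above, local content filtering and audit logging, can be sketched as a thin wrapper around whatever image API an application calls. This is a minimal illustration under stated assumptions, not a production safeguard: the `BLOCKED_PATTERNS` list is a deliberately tiny, hypothetical blocklist (a real system would use trained classifiers and human review), and `generate` and `log` are placeholder callables standing in for an upstream API client and a log sink.

```python
import hashlib
import json
import re
from datetime import datetime, timezone
from typing import Callable, Optional, Tuple

# Hypothetical, intentionally small blocklist for illustration only.
BLOCKED_PATTERNS = [
    re.compile(r"\bundress\b", re.IGNORECASE),
    re.compile(r"\bremove (her|his|their) cloth", re.IGNORECASE),
    re.compile(r"\b(child|minor)\b", re.IGNORECASE),
]


def prefilter(prompt: str) -> Tuple[bool, str]:
    """Reject prompts matching a blocked pattern before any upstream call."""
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(prompt):
            return False, f"matched blocked pattern: {pattern.pattern}"
    return True, "ok"


def audit_record(user_id: str, prompt: str, allowed: bool, reason: str) -> str:
    """Build one JSON audit line; the prompt is stored as a SHA-256 hash."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "allowed": allowed,
        "reason": reason,
    }
    return json.dumps(record, sort_keys=True)


def handle_request(
    user_id: str,
    prompt: str,
    generate: Callable[[str], str],
    log: Callable[[str], None],
) -> Optional[str]:
    """Filter, log, then call the upstream generator only if allowed."""
    allowed, reason = prefilter(prompt)
    log(audit_record(user_id, prompt, allowed, reason))
    if not allowed:
        return None
    return generate(prompt)
```

Every request is logged whether or not it is allowed, so the audit trail also shows what the filter blocked. Hashing the prompt is one possible design choice that avoids storing harmful text verbatim; organizations whose policy requires full prompt retention would store the text in access-controlled storage instead.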

Platform Selection for Development:

For most commercial applications, enterprise agreements with OpenAI (DALL-E) or Google (Imagen) provide better liability protection than Grok's consumer-facing services. These agreements typically include:

- Clear usage terms

- Content moderation SLAs

- Indemnification provisions

- Enterprise support

Safer Alternatives to Grok for Image Generation

Organizations and individuals seeking AI image generation without Grok's compliance risks have several established alternatives.

Enterprise-Grade Options

Adobe Firefly:

- Integrated with Creative Cloud

- Trained on licensed/public domain content

- Commercial use rights included

- Industry-leading brand safety

DALL-E 3 (OpenAI):

- Most restrictive content policies

- Enterprise API agreements available

- Strong watermarking and provenance tracking

- Excellent documentation and support

Google Imagen:

- Enterprise-focused deployment options

- Strong safety systems

- Integration with Google Cloud

- Comprehensive audit capabilities

Creative Professional Tools

Midjourney:

- High-quality artistic output

- Active community moderation

- PG-13 content policy

- Discord-based accessibility

Leonardo AI:

- Specialized for game development

- Asset pipeline integration

- Consistent character generation

- Multiple fine-tuned models

Open Source/Privacy Options

Stable Diffusion (Local):

- Complete control over content policy

- No external data sharing

- Requires technical setup

- User assumes all liability

For organizations with specific requirements, local Stable Diffusion deployments can implement custom safety measures while maintaining data privacy. However, this approach requires significant technical resources and places full responsibility on the operating organization.

Selection Criteria

When choosing an AI image generation platform, evaluate:

- Content policy alignment with your use case

- Enterprise support availability

- Liability and indemnification terms

- Audit and compliance capabilities

- Integration requirements with existing tools

- Cost structure for your volume needs
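One way to apply the criteria above consistently is a weighted scoring matrix. The sketch below is illustrative only: the weights are hypothetical and should be set by each organization, the criterion keys are shorthand for the six bullets above, and ratings are assumed to be on a 1 to 5 scale assigned by the evaluator.

```python
from typing import Dict

# Hypothetical weights for the six criteria listed above (higher = more important).
CRITERIA_WEIGHTS: Dict[str, int] = {
    "content_policy_alignment": 3,
    "enterprise_support": 2,
    "liability_terms": 3,
    "audit_capabilities": 2,
    "integration": 1,
    "cost": 1,
}


def weighted_score(ratings: Dict[str, int],
                   weights: Dict[str, int] = CRITERIA_WEIGHTS) -> float:
    """Weighted average of 1-5 ratings; every criterion must be rated."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[name] * ratings[name] for name in weights) / total_weight
```

Requiring a rating for every criterion prevents a platform from scoring well simply because an unfavorable criterion was left blank.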

For most enterprise and professional use cases, the additional compliance confidence from DALL-E 3, Adobe Firefly, or enterprise Imagen agreements outweighs any feature advantages from more permissive platforms.

Future Outlook: What to Expect in 2026 and Beyond

The AI image generation policy landscape will continue evolving rapidly. Understanding likely developments helps organizations and users prepare for changes.

Regulatory Trajectory

Q1 2026:

- California investigation likely to produce findings

- EU DSA enforcement actions may begin

- UK Ofcom investigation conclusions expected

- Additional national bans possible

May 2026:

- Take It Down Act full implementation

- Platform takedown requirements active

- First federal enforcement actions possible

H2 2026:

- EU AI Act provisions phase in

- State-level legislation proliferation continues

- International cooperation frameworks mature

Technology Evolution

AI image generation capabilities continue advancing, creating ongoing tension between capability and safety:

- Improved detection systems - Both platforms and regulators investing in AI-generated content identification

- Provenance tracking - Industry standards for content authenticity emerging (C2PA)

- Watermarking advances - More robust, harder to remove marking systems

- Real-time moderation - Faster intervention on policy violations

Industry Consolidation Expectations

The regulatory pressure on permissive platforms may drive market consolidation:

- Enterprise flight to safety - Organizations choosing conservative platforms

- Reduced differentiation on permissiveness - Legal risk outweighing user demand

- Standardization of safety practices - Industry norms converging toward stricter baselines

- Compliance as competitive advantage - Safety becoming marketing differentiator

Grok-Specific Predictions

xAI faces several critical decisions in 2026:

- Policy tightening likely - Legal pressure may force DALL-E-like restrictions

- Regional fragmentation - Different policies for different jurisdictions

- Enterprise pivot possible - Business offering with stronger guardrails

- Standalone app alignment - Closing gap with X platform restrictions

The company's response to ongoing investigations will significantly shape the broader industry's understanding of acceptable AI content generation boundaries.

Frequently Asked Questions

Can Grok generate NSFW content legally?

Grok can generate NSFW content of fictional adult characters in jurisdictions where such content is legal, provided users meet subscription and age verification requirements. However, generating nonconsensual intimate imagery of real people violates xAI's terms of service and may constitute a criminal offense under laws like the Take It Down Act (whose platform takedown requirements take effect in May 2026) and various state statutes.

What is Grok Spicy Mode and how do I enable it?

Spicy Mode is Grok Imagine's adult content feature allowing partial nudity and mature themes. Enabling it requires: a Premium+ ($16/month) or SuperGrok ($30/month) subscription, age verification confirming you're 18+, toggling NSFW settings in Content Preferences, and using the iOS or Android mobile app (web access is limited).

Is Grok banned anywhere?

Yes. As of January 2026, Indonesia and Malaysia have implemented complete bans on Grok access. Several other countries including the UK and parts of the EU have implemented restrictions or enhanced verification requirements. Regional availability continues to evolve as investigations progress.

What are the penalties for misusing AI image generation?

Penalties vary by jurisdiction but may include: criminal imprisonment up to 3 years under the Take It Down Act, civil liability for damages to victims, platform fines of up to 10% of qualifying worldwide revenue under the UK's Online Safety Act or 6% of global annual revenue under the EU's Digital Services Act, and account termination and legal referral by platforms. Creating CSAM carries enhanced federal penalties.

How does Grok compare to DALL-E for safety?

DALL-E 3 is significantly more restrictive than Grok. DALL-E blocks approximately 90% of inappropriate prompts versus Grok's 10-15%, prohibits all real person imagery by name, watermarks all outputs, and has no adult content mode. For enterprise and brand-safe applications, DALL-E is the recommended choice.

What should businesses know before using Grok?

Businesses should understand that: Grok faces active investigations in 12+ jurisdictions, ongoing policy changes create compliance uncertainty, no enterprise-specific agreements with liability protection exist, employee misuse could create corporate liability, and safer alternatives like DALL-E 3 or Adobe Firefly offer better risk profiles for commercial use.

Will Grok's policies become more restrictive?

Likely yes. Legal pressure from ongoing investigations, implementation of new laws like the Take It Down Act, and potential EU/UK enforcement actions will probably drive additional restrictions throughout 2026. Organizations should plan for a more restrictive environment rather than expecting current permissiveness to continue.

How can I report harmful content generated by Grok?

Report harmful content through X's reporting system for platform-integrated Grok, or through xAI's direct channels for the standalone app. For illegal content involving minors, report to NCMEC's CyberTipline (US), the Internet Watch Foundation (UK), or your national equivalent. Document evidence before reporting.